Beyond crystal balls: How to make good forecasts

Science shows mounds of data and some math are key to predicting future events.

Forget about crystal balls and tarot cards. Mathematics and a mountain of data offer a much more reliable way to predict the future.

selimaksan/E+/Getty Images

People have always tried to predict the future. Will the crops do well this year? Do those clouds mean rain? Is the tribe on the other side of the valley likely to attack?

In ancient times, people used lots of different methods to make predictions. Some studied the patterns of tea leaves left in the bottom of a cup. Others tossed bones on the ground and made forecasts from the way they landed. Some even studied the entrails, or guts, of dead animals to forecast the future. Only in modern times have scientists had much luck seeing what is truly likely to happen in the weeks or years ahead. They don’t need a crystal ball. Just plenty of data and a little math.

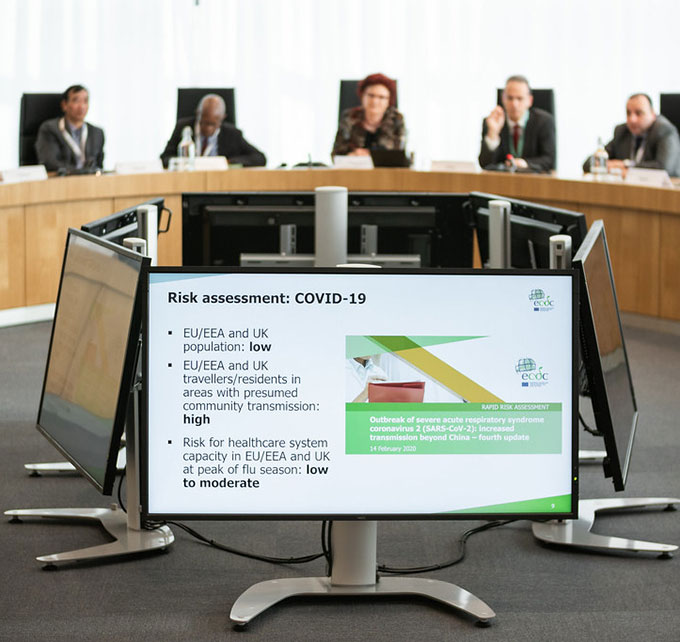

Statistics is a field of math used to analyze data. Researchers use it to predict all sorts of things. Will having more police in neighborhoods reduce crime? How many lives can be saved from COVID-19 if everyone wears masks? Will it rain next Tuesday?

To make such predictions about the real world, forecasters create a fake world. It’s called a model. Often models are computer programs. Some are full of spreadsheets and graphs. Others are a lot like video games, such as SimCity or Stardew Valley.

Natalie Dean is a statistician at the University of Florida in Gainesville. She tries to predict how infectious diseases will spread. In 2016, mosquitoes were spreading the Zika virus in southern U.S. states. Dean worked with scientists at Northeastern University in Boston, Mass., to figure out where Zika was likely to show up next.

This team used a complex computer model to simulate outbreaks. “The model had simulated people and simulated mosquitoes,” Dean explains. And the model let the people live simulated lives. They went to school. They went to work. Some traveled on planes. The model kept changing one or more details of those lives.

After each change, the team ran the analysis again. By using all types of different situations, the researchers could predict how the virus might spread under a particular set of conditions.
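To give a feel for this kind of run-it-again simulation, here is a minimal sketch in Python. It is not the Northeastern team's model, which tracked simulated mosquitoes, travel and much more. The function name, the one-day contagious period and every number are assumptions made up for illustration.

```python
import random

def simulate_outbreak(contacts_per_day, infect_chance, days=30,
                      population=1000, seed=0):
    """Count how many simulated people ever catch the virus (toy model)."""
    rng = random.Random(seed)      # fixed seed so a run can be repeated
    susceptible = population - 1   # everyone but the first case starts healthy
    infectious = 1
    total_cases = 1
    for _ in range(days):
        new_cases = 0
        for _ in range(infectious * contacts_per_day):
            # A contact only spreads the virus if it reaches a healthy person.
            if rng.random() < infect_chance * susceptible / population:
                new_cases += 1
        new_cases = min(new_cases, susceptible)
        susceptible -= new_cases
        infectious = new_cases     # assumption: people are contagious for one day
        total_cases += new_cases
    return total_cases

# Change one detail (daily contacts), then run the analysis again.
for contacts in (2, 5, 10):
    print(contacts, simulate_outbreak(contacts, infect_chance=0.2))
```

Each pass through the last loop changes a single condition and reruns the simulated world, which is the same idea the researchers applied on a far larger scale.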

Not all models are as fancy as that one. But they all need data to make their predictions. The more data a model has, and the better those data represent real-world conditions, the better its predictions are likely to be.

The role of math

Tom Di Liberto is a climate scientist. As a kid he loved snow. In fact, he got excited every time a TV weather forecaster said weather models were predicting snow. He grew up to be a meteorologist and climatologist. (And he still loves snow.) Now he figures out how weather patterns, including snowfall, might change as Earth’s climate continues to warm. He works for the company CollabraLink. His office is at the National Oceanic and Atmospheric Administration’s Climate Program Office. It’s in Silver Spring, Md., just outside Washington, D.C.

Weather and climate models, Di Liberto says, are all about breaking down what happens in the atmosphere. Those actions are described by equations. Equations are a mathematical way to represent relationships between things. They might be showing relationships affecting temperature, moisture or energy. “There are equations in physics that allow us to predict what the atmosphere is going to do,” he explains. “We put those equations in our models.”

For example, one common equation is F = ma. It explains that force (F) equals mass (m) times acceleration (a). This relationship can help predict future wind speed. Similar equations are used to predict changes in temperature and humidity.
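As a rough illustration of how such an equation becomes a prediction, the Python sketch below rearranges F = ma to get acceleration, then uses it to estimate a future speed. It assumes the force stays constant, which real weather models do not. The function name and the numbers are hypothetical.

```python
def predict_speed(force_newtons, mass_kg, start_speed, seconds):
    """Speed after `seconds`, assuming a constant net force (F = ma)."""
    acceleration = force_newtons / mass_kg   # rearranged: a = F / m
    return start_speed + acceleration * seconds

# Hypothetical numbers: a 2-newton push on 4 kilograms for 10 seconds,
# starting at 3 meters per second.
print(predict_speed(2.0, 4.0, 3.0, 10.0))
```

A real model repeats a step like this for many small chunks of the atmosphere, updating the forces each time.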

“It’s just basic physics,” Di Liberto explains. That makes it easy to come up with equations for weather and climate models.

Pattern recognition

But what if you’re building a model that lacks such obvious equations? Emily Kubicek works with these kinds of things a lot.

She’s a data scientist in the Los Angeles, Calif., area. She works for the Walt Disney Company in their Disney Media & Entertainment Distribution business segment. Let’s imagine you’re trying to figure out who will enjoy a new ice cream flavor, she says. Call it coconut kumquat. You put into your model data about all of the people who sampled the new flavor. You include what you know about them: their gender, age, ethnicity and hobbies. And, of course, you include their favorite and least favorite flavors of ice cream. Then you put in whether or not they liked the new flavor.

Kubicek calls these her training data. They will teach her model.

As the model sorts through these data, it looks for patterns. It then matches traits of the people with whether they liked the new flavor. In the end, the model might find that 15-year-olds who play chess are likely to enjoy coconut-kumquat ice cream. Now she introduces new data to the model. “It applies the same mathematical equation to the new data,” she explains, to predict whether someone is going to like the ice cream.

The more data you have, the easier it is for your model to detect whether there is a true pattern or just random associations — what statisticians call “noise” in the data. As scientists feed the model more data, they refine the reliability of its predictions.
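The ice cream example can be sketched in a few lines of Python. This is only a toy, not the kind of model Kubicek builds: it simply counts what share of tasters in each group liked the flavor. The tasters, their traits and the function names are all invented for illustration.

```python
from collections import defaultdict

def train(samples):
    """Learn the share of tasters in each (age, hobby) group who liked the flavor."""
    counts = defaultdict(lambda: [0, 0])      # group -> [liked, total]
    for age, hobby, liked in samples:
        counts[(age, hobby)][0] += liked      # True counts as 1, False as 0
        counts[(age, hobby)][1] += 1
    return {group: liked / total for group, (liked, total) in counts.items()}

def predict(model, age, hobby):
    """Return the learned share for a new person, or None for an unseen group."""
    return model.get((age, hobby))

# Made-up training data: (age, hobby, liked the new flavor?)
training_data = [
    (15, "chess", True), (15, "chess", True),
    (40, "golf", False), (40, "golf", True),
    (40, "golf", False), (40, "golf", False),
]
model = train(training_data)
print(predict(model, 15, "chess"))
print(predict(model, 40, "golf"))
```

With only two chess players in the data, the pattern for that group could easily be noise. Adding more tasters is what would show whether it holds up.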

Hot dirt

Of course, for the model to do its prediction magic, it needs not only lots of data, but also good data. “A model is kind of like an Easy Bake Oven,” says Di Liberto. “With the Easy Bake Oven, you put the ingredients in one end and a little cake comes out the other end.”

What data you need will differ depending on what you’re asking the model to predict.

Michael Lopez is a statistician in New York City for the National Football League. He might want to predict how well a running back will do when he gets the ball. To predict that, Lopez collects data about how many times that football player has broken through a tackle. Or how he performs when he has a certain amount of open space after getting the ball.

Lopez looks for very specific facts. “Our job is to be precise,” he explains. “We need the exact number of tackles the running back was able to break.” And, he adds, the model needs to know “the exact amount of open space in front of [the tackle] when he got the ball.”

The point, Lopez says, is to turn large sets of data into useful information. For example, the model might make a graph or table that shows under what circumstances players get injured in a game. This could help the league make rules to boost safety.

But do they ever get it wrong? “All the time,” says Lopez. “If we say something was only 10 percent likely to happen and it happens 30 percent of the time, we probably need to make some changes to our approach.”
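The check Lopez describes, comparing the stated chance with how often the event really happened, might look something like the Python sketch below. The record of forecasts is made up, and the cutoff for flagging a problem is an arbitrary assumption, not an NFL rule.

```python
from collections import defaultdict

def observed_rates(forecasts):
    """Map each stated probability to how often the event really happened."""
    outcomes = defaultdict(list)
    for stated_chance, happened in forecasts:
        outcomes[stated_chance].append(happened)
    return {chance: sum(hits) / len(hits) for chance, hits in outcomes.items()}

# Made-up record: ten events each given a 10 percent chance; three came true.
record = [(0.10, True)] * 3 + [(0.10, False)] * 7

for stated, actual in observed_rates(record).items():
    # Assumed cutoff: flag any gap bigger than 10 percentage points.
    verdict = "check the model" if abs(actual - stated) > 0.10 else "ok"
    print(stated, actual, verdict)
```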

This happened recently with the way the league measures something called “expected rushing yardage.” This is an estimate of how far a team is likely to carry a football down the field. There’s plenty of data on how many yards were gained. But those data don’t tell you why the ball-carrier was successful or why he failed. Adding more precise information helped the NFL improve these predictions.

“If you have poor ingredients, it doesn’t matter how good your math is or how good your model is,” says Di Liberto. “If you put a pile of dirt into your Easy Bake Oven, you’re not going to get a cake. You’re just going to get a hot pile of dirt.”

Wash, rinse, repeat

As a rule, the more complex the model and the more data used, the more reliable a prediction will be. But what do you do when mountains of good data don’t exist?

Look for stand-ins.

There’s still much to learn about the virus that causes COVID-19, for instance. Science does, however, know a lot about other coronaviruses (a few of which cause colds). And a lot of data exist about other diseases that spread easily. Some are at least as serious. Scientists can use those data as stand-ins for data on the COVID-19 virus.

With such stand-ins, models can begin to forecast what the new coronavirus might do. Then scientists put a range of possibilities into their models. “We want to see if the conclusions change with different assumptions,” explains Dean at Florida. “If no matter how much you change the assumption, you get the same basic answer, then we feel much more confident.” But if they change with new assumptions, “then that means this is something we need more data about.”
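Here is a minimal Python sketch of that kind of test. It uses a toy growth rule in which each case leads to a fixed number of new cases per round, then asks whether the answer to "does the outbreak grow?" stays the same across a range of assumed spread rates. The rule, the rates and the function names are assumptions for illustration, not values from Dean's work.

```python
def cases_after(rounds, spread_rate, starting_cases=10):
    """Toy rule: each case leads to `spread_rate` new cases every round."""
    return starting_cases * spread_rate ** rounds

def conclusion_holds(spread_rates, rounds=5, starting_cases=10):
    """True if every assumed spread rate gives the same answer about growth."""
    answers = {
        cases_after(rounds, rate, starting_cases) > starting_cases
        for rate in spread_rates
    }
    return len(answers) == 1

# Stand-in values borrowed from "similar" diseases (hypothetical numbers).
print(conclusion_holds([1.5, 2.0, 3.0]))   # all assumptions agree
print(conclusion_holds([0.8, 1.5]))        # assumptions disagree
```

When the second kind of result shows up, the conclusion depends on which assumption is right, and that is the signal that more data are needed.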

Burkely Gallo knows the problem. She works for an organization that provides research to the National Weather Service (NWS) to help improve its weather forecasts. Her job: Forecast tornadoes. She does this at the federal Storm Prediction Center in Norman, Okla.

Tornadoes can be devastating. They are fairly rare and can pop up in a flash and vanish minutes later. That makes it hard to collect good data on them. That data shortfall also makes it a challenge to predict when and where the next tornado will occur.

In these cases, ensembles are very useful. Gallo describes these as a collection of forecasts. “We change the model in a small way, then run a new forecast,” she explains. “Then we change it in another small way and run another forecast. We get what’s called an ‘envelope’ of solutions. We hope that reality falls somewhere in that envelope.”
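The idea of an envelope can be sketched in Python with a toy forecast. This is far simpler than any real tornado model: it predicts tomorrow's temperature from a warming rate, nudges that rate a little for each run, and reports the lowest and highest results. The forecast rule, the size of the nudge and the names are all hypothetical.

```python
import random

def toy_forecast(start_temp, warming_per_hour, hours=24):
    """Toy rule: temperature changes at a steady rate."""
    return start_temp + warming_per_hour * hours

def ensemble_envelope(start_temp, warming_per_hour, members=20,
                      nudge=0.05, seed=1):
    """Run many slightly altered forecasts; return the (low, high) envelope."""
    rng = random.Random(seed)   # fixed seed so the ensemble can be repeated
    runs = []
    for _ in range(members):
        tweaked_rate = warming_per_hour + rng.uniform(-nudge, nudge)
        runs.append(toy_forecast(start_temp, tweaked_rate))
    return min(runs), max(runs)

low, high = ensemble_envelope(start_temp=15.0, warming_per_hour=0.2)
print(low, high)
```

A narrow envelope suggests the forecast does not depend much on small changes. A wide one is a warning that reality could land almost anywhere.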

Once she’s accumulated a large number of forecasts, Gallo looks to see if the models were right. If tornadoes don’t show up where they were predicted, she goes back and refines her model. By doing that on a bunch of forecasts from the past, she works to improve future forecasts.

And the forecasts have improved. For example, on April 27, 2011, a series of tornadoes slammed through Alabama. The Storm Prediction Center had forecast which counties these storms would hit. The NWS even predicted at what time. Still, 23 people were killed. One reason: After a history of false-alarm tornado warnings, some people didn’t take shelter.

The NWS office in Birmingham, Ala., set out to see if it could reduce false alarms. To do this, it added more data to its forecasts. These were data such as the height of the base of a rotating cloud. Also, it looked at which types of air circulation were more likely to spawn tornadoes. This helped. Researchers managed to cut the share of false positives by almost a third, according to an NWS report.

Di Liberto says this “hind-casting” is the opposite of forecasting. You look back at what you know and test it in models to see how well it would have predicted what actually happened. Hind-casting also helps researchers get to know what works and what doesn’t in their models.

“For example, I might say, ‘Oh, this model tends to overdo precipitation with hurricanes in the Atlantic,’” says Di Liberto. Later, when a forecast with this model predicts 75 inches of rain, he says, one can assume it’s an exaggeration. “It’s like you have an old bike that tends to veer in one direction. You know that, so you adjust as you ride.”
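One simple way to "adjust as you ride" is to measure how far off a model was in the past and subtract that amount from its next forecast. The Python sketch below does this with made-up rainfall numbers. Real bias correction is usually more involved, and the function names here are hypothetical.

```python
def average_bias(past_forecasts, past_observed):
    """Average amount by which past forecasts overshot what really happened."""
    errors = [f - o for f, o in zip(past_forecasts, past_observed)]
    return sum(errors) / len(errors)

def corrected(new_forecast, bias):
    """Shift a new forecast by the bias found through hind-casting."""
    return new_forecast - bias

# Made-up hind-cast: inches of rain the model predicted vs. what fell.
predicted = [10.0, 20.0, 30.0]
observed = [8.0, 17.0, 26.0]

bias = average_bias(predicted, observed)
print(bias)
print(corrected(25.0, bias))
```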

A game of chance

When our ancestors consulted entrails, they may have gotten very definite answers to their questions, even if they were often wrong. You’d better stockpile grain, buddy. There’s famine ahead. Math doesn’t give such definite answers.

No matter how good the data, how good the model or how clever the forecaster, predictions don’t tell us what will happen. They instead tell us the probability — how likely it is — that something will happen. That’s why weather forecasters say there’s a 70 percent chance of rain during tomorrow’s ball game or a 20 percent chance of snow on Christmas. The better the model and the more skilled the forecaster, the more reliable that prediction will be.

There’s a huge amount of data about the weather. And forecasters get to practice and test their results every day. That’s why weather forecasts have improved dramatically in recent years. Five-day weather forecasts are as accurate today as next-day forecasts were in 1980.

Still, there is always some uncertainty. And forecasting things that happen fairly rarely, such as global pandemics, can be the hardest to get right. There are simply too few data to describe all of the actors (like the virus) and the conditions. But math is the best way to make fairly sound forecasts with whatever data are available.