Seeing the world through a robot’s eyes

A new kind of camera promises better visuals for self-driving cars, drones and other robots

This image was captured by a new camera that can focus on different objects. The normal-light version shows what the human eye sees. The red and blue versions offer clues to distance: red objects are closer, blue ones are farther away, and mid-range ones appear purple.

Stanford Computational Imaging

With any luck, the future will bring more self-driving cars and flying drones to deliver pizza and other goodies. For those robots to get around safely, though, they need to both see their surroundings and understand what they’re seeing. A new kind of camera developed by engineers in California may help them do just that. It sees more than what meets our eyes.

The new camera combines two powerful traits. First, it takes exceptionally wide images. Second, it collects data about all the light bouncing around the scene. Then, an onboard computer uses those data to quickly analyze what the camera sees. It can calculate the distance to something in the picture, for example. Or it can refocus a specific spot within the image.

Such calculations would help self-driving cars or drones better recognize what’s around them. What kinds of things? These might include other vehicles, obstacles, intersections and pedestrians. The technology could be used to build cameras that help their host vehicle make faster decisions — and use less power — than do the cameras on drones and vehicles now. A car might then use those data to navigate more safely.

Self-driving or self-flying vehicles “have to make decisions quickly,” notes Donald Dansereau. He’s an electrical engineer at Stanford University, in Palo Alto, Calif. Along with scientists there and at the University of California, San Diego, he helped build the new camera. The team presented its camera at a conference in July.

The big picture

As with other cameras, this one uses lenses. Lenses are transparent, so they let light through. And they’re curved, so they can bend the rays of incoming light. For example, the lens in the human eye focuses light on sensitive receptor cells at the back of the eye.

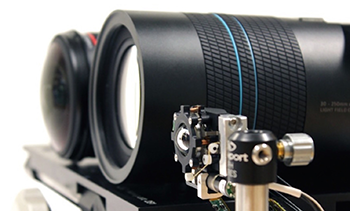

The new camera uses a spherical lens. It’s about the size of a wild cherry and made of two concentric shells of glass. Behind it sits a grid of 200,000 microlenses. Each of these is smaller than the width of the finest hair.

Here’s how they work together.

The spherical lens allows the camera to capture light across a wide field of view. Field of view is everything that can be seen through a lens without moving it. If you could see everything that surrounds you at once, you would have a 360-degree field of view, or a full circle. A person’s eye has a field of view of about 55 degrees, or roughly one-sixth of a full circle. (To see this, cover one eye and look straight ahead. What you can see without moving your eye or head is your eye’s field of view.)

The new camera is mounted on a rotating arm. This gives it a field of view of 140 degrees, or nearly two-fifths of a full circle. That means it can see most of what lies in front of it.
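To put these angles in perspective, the short Python sketch below divides each field of view by the 360 degrees in a full circle. The function name `fraction_of_circle` is made up for this illustration; the two angles are the ones quoted in this article.

```python
FULL_CIRCLE_DEG = 360.0


def fraction_of_circle(fov_deg: float) -> float:
    """Return the share of a full 360-degree circle that a field of view covers."""
    if not 0 <= fov_deg <= FULL_CIRCLE_DEG:
        raise ValueError("field of view must be between 0 and 360 degrees")
    return fov_deg / FULL_CIRCLE_DEG


# Angles quoted in the article
for label, fov in [("human eye", 55.0), ("new camera", 140.0)]:
    print(f"{label}: {fov:.0f} degrees is {fraction_of_circle(fov):.2f} of a full circle")
```

It prints about 0.15 of a circle for the eye and about 0.39 for the camera.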

To capture information about a scene, the innovative camera uses a technology called light field photography. The term “light field” refers to all of the light in a scene. Light reaches a camera lens only after it bounces off one or more objects in the scene. To imagine this, think of light particles, called photons, as pinballs that race through a pinball machine, bouncing off obstacles along the way. When a photon passes through a microlens, a sensor behind it detects the photon and records both where the light landed and the direction it came from.
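Here is one simplified way to picture that bookkeeping. In an idealized light field camera, each microlens sits over a small patch of sensor pixels. Which microlens the light passed through tells roughly where it landed; which pixel under that microlens it hit tells which direction it came from. This sketch assumes a perfectly square grid with every microlens over exactly the same number of pixels. That is a hypothetical layout, since the article does not describe the real camera’s sensor, and real cameras need careful calibration. The names `Ray` and `decode_pixel` are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ray:
    """One recorded light sample: where it landed and which way it was heading."""
    lens_x: int  # which microlens column the light passed through (position)
    lens_y: int  # which microlens row (position)
    dir_x: int   # pixel column under that microlens (direction)
    dir_y: int   # pixel row under that microlens (direction)


def decode_pixel(px: int, py: int, pixels_per_lens: int) -> Ray:
    """Map a raw sensor pixel to a (position, direction) sample.

    Hypothetical idealized layout: a square grid of microlenses, each sitting
    exactly over a pixels_per_lens x pixels_per_lens patch of sensor pixels.
    """
    if pixels_per_lens <= 0:
        raise ValueError("pixels_per_lens must be positive")
    lens_x, dir_x = divmod(px, pixels_per_lens)
    lens_y, dir_y = divmod(py, pixels_per_lens)
    return Ray(lens_x, lens_y, dir_x, dir_y)


# With 10 x 10 pixels under each microlens, sensor pixel (23, 9) lies under
# microlens (2, 0) and is pixel (3, 9) within that microlens's patch.
print(decode_pixel(23, 9, pixels_per_lens=10))
```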

Because there are so many microlenses in the grid, the camera can capture detailed data about the light. The onboard computer can turn those data into information about where things are, and how they’re moving — similar to how the brain might work as its host walks around a room.

The camera can use that information in many ways. A self-driving car, for example, could use it to “see” nearby cars and avoid them. It could focus on one specific car (such as the one in front) or another (such as one coming toward it). Because light field photography records all the light in a scene, the camera can analyze and change the picture using those data, even after the image has been “taken.”
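One standard way to refocus after the fact, known in light field research as “shift-and-add,” treats the recorded light as a set of slightly offset views of the scene. Shifting each view by an amount tied to a chosen distance and then averaging them makes objects at that distance line up and stay sharp, while everything else smears out. The article does not say which method the Stanford camera uses, so this is a toy one-dimensional sketch of the general idea; the function names and the scene data are made up.

```python
def refocus(views: dict[int, list[float]], shift_per_view: float) -> list[float]:
    """Shift-and-add refocusing on a toy one-dimensional light field.

    views maps a viewpoint offset u (..., -1, 0, 1, ...) to a 1-D image.
    View u is shifted by round(shift_per_view * u) pixels, then all views are
    averaged. Objects whose parallax matches the shift line up and look sharp;
    everything else is smeared out.
    """
    width = len(next(iter(views.values())))
    out = [0.0] * width
    for u, image in views.items():
        shift = round(shift_per_view * u)
        for x in range(width):
            src = x + shift
            if 0 <= src < width:  # pixels shifted in from outside the frame add nothing
                out[x] += image[src]
    return [value / len(views) for value in out]


def toy_scene(width: int, center: int, parallax: int, offsets: range) -> dict[int, list[float]]:
    """Made-up scene: one bright point that moves `parallax` pixels per viewpoint step."""
    views = {}
    for u in offsets:
        image = [0.0] * width
        image[center + parallax * u] = 1.0
        views[u] = image
    return views


views = toy_scene(width=11, center=5, parallax=2, offsets=range(-1, 2))
sharp = refocus(views, shift_per_view=2)   # focus set to the point's distance
blurry = refocus(views, shift_per_view=0)  # focus set somewhere else
print(max(sharp), max(blurry))
```

When the focus matches the point’s distance, all of its light lands in a single pixel with brightness 1.0. When it does not, the same light is spread across three pixels of about 0.33 each.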

These abilities give the camera a big advantage over human vision. People’s eyes don’t capture all the information about the distance and movement of objects. Instead, we move our heads around to see things from different angles. Then our brains combine those views. The new camera can see more than a person’s two eyes do. “It gets the same information, but like it has more than two eyes,” explains Dansereau.
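Dansereau’s “more than two eyes” comparison can be illustrated with textbook triangulation. A nearby object appears to shift more between two viewpoints than a distant one does, and with many viewpoints the shifts can be combined for a steadier estimate. The article does not describe how the camera actually computes distance, so treat this as a simplified sketch that assumes an ideal pinhole camera. The function name and all numbers are hypothetical.

```python
def depth_from_views(baselines_m: list[float], disparities_px: list[float], focal_px: float) -> float:
    """Estimate the distance (meters) to one feature seen from several viewpoints.

    Textbook pinhole triangulation: a point Z meters away appears shifted by
    d = focal_px * b / Z pixels between two viewpoints b meters apart.
    With several viewpoints, fit d = k * b by least squares (a line through
    the origin) and return Z = focal_px / k.
    """
    if len(baselines_m) != len(disparities_px) or not baselines_m:
        raise ValueError("need matching, non-empty lists of baselines and disparities")
    den = sum(b * b for b in baselines_m)
    if den == 0:
        raise ValueError("viewpoints must be spread apart")
    k = sum(b * d for b, d in zip(baselines_m, disparities_px)) / den  # pixels of shift per meter
    if k <= 0:
        raise ValueError("no usable parallax: the feature may be very far away")
    return focal_px / k


# Made-up numbers: a 1000-pixel focal length and a feature 5 m away, seen
# from three viewpoints 1, 2 and 3 cm from a reference view.
print(depth_from_views([0.01, 0.02, 0.03], [2.0, 4.0, 6.0], focal_px=1000.0))
```

This prints a distance of about 5 meters. Each extra viewpoint adds another measurement to the fit, which is one reason more “eyes” can help when individual measurements are noisy.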

Bug-eyed robots?

The camera combines powerful lenses with fast computer programs. This approach is called computational photography. It’s a field of science that is growing more popular. But long before human scientists tried it, Mother Nature came up with her own version: bug eyes.

Insects’ eyes work similarly to the microlens array in the new camera, notes Young Min Song. He’s an engineer at the Gwangju Institute of Science and Technology in South Korea. Song designs digital cameras, although he did not work on this one. He notes that the eyes of insects such as bees and dragonflies collect light information from thousands of tiny hexagonal elements, or facets; a dragonfly eye can have as many as 30,000. Each facet collects light from a slightly different direction. The microlenses in the new camera act like facets. Song has previously developed a camera that used hundreds of microlenses as facets.
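A toy model shows the idea that each facet, or microlens, looks in a slightly different direction. It splits a field of view evenly among a row of facets and then asks which facet sees a given direction. The even, one-dimensional spacing is a simplifying assumption, since real compound eyes and the camera’s microlens grid are two-dimensional. The 140-degree view is borrowed from the article only as a convenient number, and the function names are made up.

```python
from typing import Optional


def facet_centers(num_facets: int, fov_deg: float) -> list[float]:
    """Center viewing angle, in degrees, of each facet in an evenly spaced 1-D row."""
    step = fov_deg / num_facets
    return [-fov_deg / 2 + step * (i + 0.5) for i in range(num_facets)]


def facet_for(angle_deg: float, num_facets: int, fov_deg: float) -> Optional[int]:
    """Index of the facet whose slice of the view contains angle_deg, or None if outside."""
    half = fov_deg / 2
    if not -half <= angle_deg < half:
        return None
    return int((angle_deg + half) // (fov_deg / num_facets))


# Ten facets sharing a 140-degree view: each one covers a 14-degree slice.
print(facet_centers(10, 140.0))
print(facet_for(0.0, 10, 140.0), facet_for(-69.0, 10, 140.0), facet_for(80.0, 10, 140.0))
```

Light arriving straight ahead lands on facet 5, light from far to the left lands on facet 0, and light from outside the 140-degree view is not seen at all.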

Like the bugs’ brains, the new camera has to analyze all of those light data. For that, it uses an onboard computer to quickly run calculations. Getting the camera to work required more than a year of trial and error, notes Dansereau.

One key innovation was the lens. His team tried spherical lenses of various sizes before finding one that had a wide field of view and worked with the microlenses that capture data on the light field.

The group’s prototype, or working model, is big and bulky. It also requires a rotating arm to “see” in different directions. Song thinks these problems can be solved. For now, however, the camera “is not applicable to actual use,” he points out.

But it is getting closer. Dansereau says his team is now working on a version that uses more sensors instead of a rotating arm. The researchers also want to make it small and light enough for drones or self-driving cars, and they hope to add the ability to capture video. They also plan to publish their designs so that other scientists can build the cameras.

“We want to get these things out there so people can use them,” he says. “Our ultimate goal is to make something useful.”

This is one in a series presenting news on technology and innovation, made possible with generous support from the Lemelson Foundation.