AI can guide us — or just entertain

Smart machines are helping humans do their jobs better — and helping robots become better at what people do

Apple’s Siri and other smartphone voice-recognition systems are an everyday example of a digital assistant that exhibits artificial intelligence.

AntonioGuillem/iStockphoto

By Dinsa Sachan

“Have you heard the rumor about butter? … Never mind — I shouldn’t spread it.”

Go ahead and roll your eyes. We’ll wait.

This groaner is just one of the many, many terrible jokes that Amazon’s “personal assistant” software, Alexa, will tell you — if you ask. But Alexa can do a lot more than make bad puns. Many people start their mornings by asking Alexa for the weather forecast or the latest news. A device that houses the software can also play music from your favorite playlists, keep a shopping list, order takeout food, answer trivia questions, send voice messages and even run “smart” home controls like thermostats.

Alexa is a form of artificial intelligence, or AI for short. But this digital personal assistant is just one of many AI systems that have become a part of modern life. Another well-known one is AlphaGo. It’s an AI system designed by Google that recently beat a human champion, Lee Sedol, at the strategy board game Go. Other examples of AI abound. A spam-filtering assistant can detect which messages are pure junk. Then there are all of those self-driving vehicles that have started taking to the road.

Training AI systems to respond to problems with human-like intelligence — and learn from their mistakes — can take months, or even years.

Consider Alexa and similar software, such as Apple’s Siri. To do the tasks their human overlords ask, these systems must make sense of and then respond to sentences such as, “Alexa, play my Ed Sheeran playlist” or “Siri, what is the capital of India?” Computers can’t understand language as it is spoken by people. So AI researchers must find a way to help humans communicate with computers. The technology used to get computers to “understand” human speech or text is known as natural language processing. By natural language, computer scientists mean the way people naturally talk or write.

To teach an AI system a task like understanding a sentence in English or responding to a person’s last move in a board game, scientists need to feed it lots of examples. To train AlphaGo, Google had to show it 30 million Go moves that people had made while playing the game. Then AlphaGo used what it learned by analyzing those plays (such as which moves won and which lost) as it played against different versions of itself.

During this practice, the program became so skilled and clever that it came up with novel moves — ones never seen in games between people.

Computers, software and devices that are powered by AI can do much more, however, than just play board games and music. They can perform serious tasks, such as helping kids learn math or helping doctors decide how to treat cancer.

The doctor’s computer will see you now

Scientists generate new information about diseases and treatments each and every day. According to one study, 2.5 million scientific papers were published worldwide in 2014 alone. A great many of these appeared in medical journals. Can doctors keep tabs on every new development in their field? Not a chance. But computers can.

Take Watson. IBM built this computer for one purpose — to answer people’s questions. Watson uses the same natural-language-processing approach that gives Alexa and Siri their gift of the gab. In 2011, Watson demonstrated just how good it was when it beat two flesh-and-blood champions answering questions on the TV show Jeopardy! That win earned Watson more than $77,000.

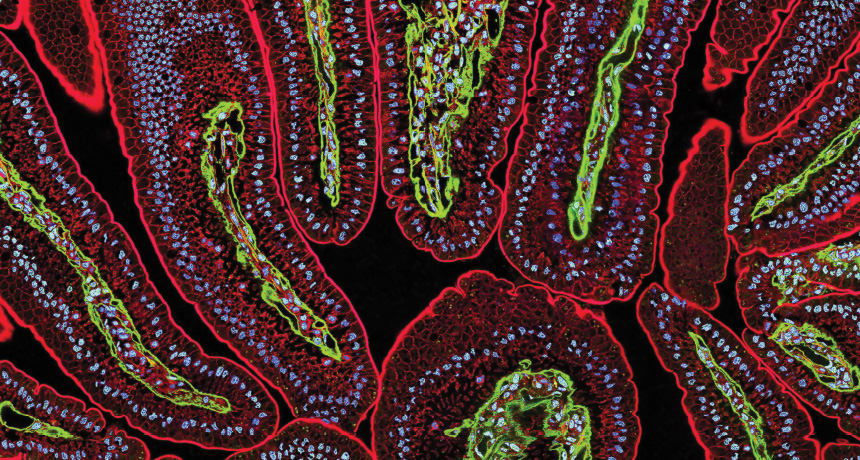

Clearly, Watson was a champ at answering trivia. Now IBM has given this quiz whiz a more serious job: helping doctors find the right treatments for cancer patients. In its new role, the program is known as Watson for Oncology. (Oncology refers to the study or treatment of cancer.)

Doctors at Memorial Sloan Kettering Cancer Center in New York City trained the program. Now a physician can ask this Watson system to recommend a treatment for a particular patient. Before it answers, the AI assistant quickly pulls up the patient’s medical history and lab reports. It also can review notes from doctors that are written in plain English. And it can tap into data on other Memorial Sloan Kettering patients — and how well their treatments worked. That’s thousands of cancer patients a year. Watson for Oncology can even sift through millions of pages of medical research to learn what other researchers have been reporting about a particular drug’s safety or how well it works.

In the end, a doctor may choose not to follow Watson for Oncology’s recommendation. When that happens, the software will log that information too. “It continues to learn as it gathers more and more experience,” explains Andrew Norden. He is a doctor and was a senior officer at IBM.

In one study, researchers at Manipal Hospital in Bangalore, India, found that Watson’s recommendations for treating breast tumors matched those of the experts nine out of every 10 times. In another study, researchers at Memorial Sloan Kettering found that Watson for Oncology agreed with doctors’ recommendations for breast cancer patients in 98 out of every 100 instances.

“It’s a very valuable assistant to the doctors,” says Amit Rauthan. He is a doctor at Manipal Hospital. He uses Watson for Oncology as part of his practice. Yet he and other doctors don’t always follow Watson’s advice. Why? Many patients in India, for example, cannot afford the treatments that were the system’s first choices.

Watson for Oncology is one of many AI systems that hospitals now use. As helpful as such systems are, computer scientist Joel Rosiene warns that they are not a cure-all. He works at Eastern Connecticut State University in Willimantic.

First, he notes, not all AI systems explain why they recommended what they did. If something goes wrong, it can be hard to figure out how to tweak the system to prevent future errors. “With humans, we can ask them what led them to a conclusion,” he notes.

Also, Rosiene asks, what if an AI system recommends some treatment based on poorly conducted studies? Rosiene says scientists working on AI systems in clinics and hospitals need to be aware of such risks.

Even though they’re not perfect, AI systems are probably a permanent part of medicine. But they won’t soon replace doctors, says computer scientist James Hendler. He works at Rensselaer Polytechnic Institute in Troy, N.Y. “Ten years from now, you won’t want to go to a doctor who doesn’t have an AI helping her,” he says. Then again, he adds, “I don’t think you will go to an AI who doesn’t have a doctor helping it.”

A math tutor at your beck and call

Learning complex math concepts can frustrate some students, especially if their teachers are too busy to give them much extra attention.

To help bridge that gap, more than 2,500 U.S. schools are using AI-powered software called Mathia. (Until last year, it was called Cognitive Tutor.) The system helps teach a host of math concepts to students from grades 6 through 11.

Mathia is different from ordinary math software. Most math problems can be solved in more than one way. Mathia can recognize each student’s learning style. Then it will try to serve up customized clues — ones especially suited to that individual.

“The software watches as the student solves each problem,” says Steven Ritter. He’s a top scientist at the company that created Mathia: Carnegie Learning of Pittsburgh, Pa. As a student dives into a math problem, Mathia gives feedback. It may offer hints that reflect the student’s skills at — or observed problems with — that type of problem.

Mathia and other tools like it are called “intelligent tutoring systems.” In a 2014 study, John Nesbit reviewed studies on such systems. This educational psychologist works at Simon Fraser University in Vancouver, Canada. Together, the studies he reviewed had included more than 14,000 students. And kids who learned from intelligent-tutoring systems scored much better than did students taught in large classrooms. The difference in test results, he says, would have been enough to boost the students’ grades.

One-on-one teaching still out-performs the software, he notes. But that kind of teaching isn’t available to everyone. “One-to-one tutoring by a human teacher is great,” Nesbit notes. But school budgets “will never be able to provide it to each student.” He thinks that intelligent-tutoring systems might “help to fill that gap.”

Computers with the soul of an artist?

Without question, computers are aces at crunching huge volumes of data. Creativity, however, has always been their weakness. But some AI systems are now creating art from scratch.

It all started with Harold Cohen back in the 1970s. He was an artist who also taught at the University of California, San Diego.

When Cohen died in 2016, at age 87, he left behind a remarkable achievement: AARON. This was a computer program he designed. It could create beautiful and original artworks on a computer screen.

Cohen fed the program data on basic drawing and painting concepts. Using those, it learned how to draw living things — plants and animals — as well as objects such as chairs and desks. One drawing AARON created in 1991 shows two women in colorful clothes. A potted plant sits beside them.

Cohen reprogrammed AARON several times throughout his career. Each time, he tried to improve its artistic ability.

He started by teaching it about mostly abstract shapes. In the 1990s, AARON learned basic knowledge about the world — what plants, people and certain objects look like. Later, the system learned about perspective. For example, it learned how objects in the background should look smaller than nearer objects.

In the late 1990s, AARON learned to mix colors to produce new shades. It also learned what colors were appropriate for shapes representing plants and people. When AARON “painted,” Cohen said, he never interfered with its artistic process. As the program ran, it drew on its own, using what it knew of shapes and artistic concepts.

Since Cohen’s death, AARON has sat unused.

Cohen believed his machine was truly making art. “The program is being creative,” he said in a 1987 video interview. What he meant was that it created original drawings — images “that no one has seen before, including me.”

Not everyone agrees. Some researchers argue that Cohen had a lot more control over AARON than he let on. “Cohen did pioneering work,” says Dan Ventura. He’s a computer scientist at Brigham Young University in Provo, Utah. He argues that “the products that AARON produced were the kinds of products Cohen would produce himself, because Cohen told it how to produce them.”

Cohen tinkered with AARON’s design over the years. Here’s how the final version worked. First, AARON created a sketch for a painting on a workstation connected to a touchscreen and to a smaller screen that displayed color and brush options. Cohen then selected a color and chose the type of brush effect he wanted to use to fill in AARON’s sketch. Finally, he filled in the sketch by moving his fingers across the touchscreen. Think of it as digital fingerpainting.

Even though Cohen’s work is controversial, most researchers agree AARON was a great example of a human-machine collaboration. AARON’s work has been presented in major art museums around the world, including the Tate Gallery in London, England, and the San Francisco Museum of Modern Art in California.

While AARON helped Cohen produce art, a four-armed, marimba-playing robot called Shimon helps artists generate unique music. A team led by Gil Weinberg has been honing Shimon’s skills. Weinberg is a professor at Georgia Institute of Technology in Atlanta.

Weinberg’s team fed Shimon 5,000 songs. These included a mix of pop, jazz, classical and rock tunes. The researchers also gave Shimon millions of snippets of music by great musicians. That way it could learn a variety of musical rhythms and themes. Such exercises helped Shimon tell one musical style from another.

Weinberg’s former student, Mason Bretan, was one of Shimon’s trainers. He recently received his PhD from Georgia Tech. He explains that Shimon was trained to detect patterns that humans tend to notice while listening to music. For example: What instruments tend to perform jazz pieces? What pitches and chords are common in Western classical music?

Shimon’s four arms give it an advantage over human musicians. It can play faster than people can. And it can handle more complex pieces. Because its arms can stretch far and wide, Shimon also can play a greater range of pitches at once.

When asked to improvise — play something novel that it just “thought” up — Shimon can mix different styles and create something surprising. It can jam with human musicians and come up with original music on the fly. It even bobs its robot head to the beat, just like any psyched musician would. “It’s the visual and social cues that humans use that we have tried to put in Shimon as well,” explains Weinberg.

Weinberg wants Shimon to produce music that people can’t. It’s not necessarily better music — just different. The goal, says Weinberg, is to create “a creative being who happens to be a robot.”

There are still a few things people can do that Shimon cannot. For example, people can produce music that can make someone cry or smile. By getting Shimon to perform with people, Weinberg says, he aims to achieve the best of both worlds. Robots bring their superb mechanical ability. People, meanwhile, bring emotions and expressions to the table.

Smart machines won’t do your homework or teach you how to play the guitar. They can, however, help you learn more effectively and create novel music. These amazing AI systems might just help you become the best version of yourself.
