A new test could help weed out AI-generated text

New ways to spot bot talk may reduce cheating and misinformation

Raidar is a new tool for identifying AI-generated text. It relies on a simple idea: AI models tend to make very few edits when asked to improve text that came from an AI model.


Imagine that you’re helping judge a writing contest at your school. You want to make sure everyone did their own work. If someone used an artificial intelligence, or AI, model such as ChatGPT to write an entry, that shouldn’t count. But how can you tell whether something was written by AI? New research reveals a simple way to test whether a person wrote something or not. Just ask a bot to rewrite it.

“If you ask AI to rewrite content written by AI, it will have very few edits,” says Chengzhi Mao. When AI rewrites a person’s text, it typically makes many more changes.

Mao, Junfeng Yang and their colleagues designed a tool called Raidar. This detector uses AI rewriting to spot bot-generated text. Mao is a researcher at the Software Systems Lab at Columbia University in New York. Yang leads this lab.

Separating bot-talk from person-talk is “very important,” says Yang. Lots of AI writing has already flooded social media and product reviews. It has fueled fake news websites and spam books. Some students use AI to cheat on homework and tests. Tools like Raidar could help expose AI-powered cheaters and liars.

Raidar’s creators shared the tool at the International Conference on Learning Representations. That meeting was on May 7 in Vienna, Austria.

Weeding out AI

Mao regularly uses ChatGPT to help polish his own writing. For example, he sometimes asks the bot to rewrite and improve an email. He noticed that this bot can do a pretty good job the first time it rewrites something that he wrote. But if he asks it to improve an email again — revising its own bot writing — then it won’t change much.

“That’s how we got motivated,” Mao says. He realized the number of edits a bot makes to a piece of writing might say something about how the original text got written.

“It’s a pretty neat idea,” says Amrita Bhattacharjee. “Nobody had thought of it before.” Bhattacharjee is a PhD student at Arizona State University in Tempe. She has researched AI-generated text detection, but wasn’t involved in developing Raidar.

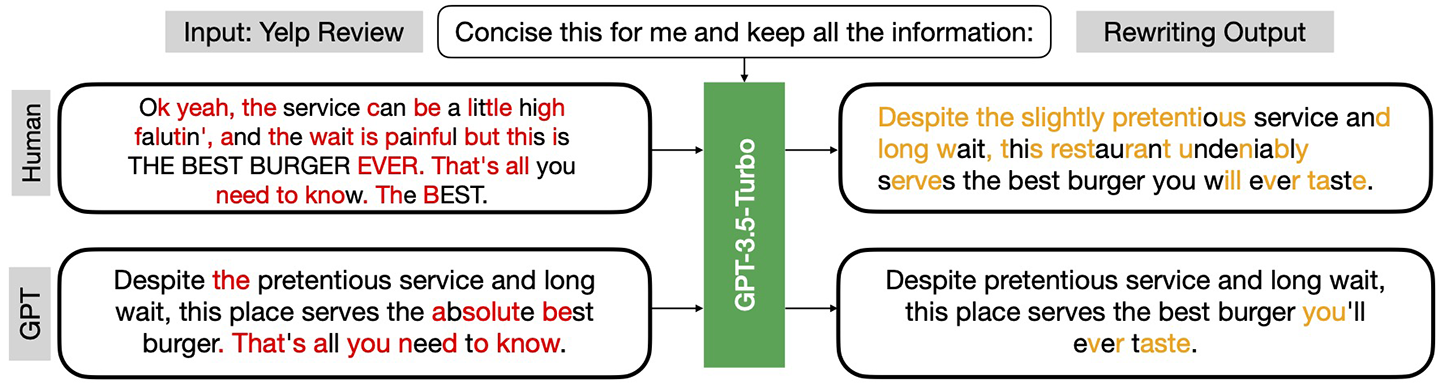

Raidar is a tool that determines whether text was likely AI-generated or not. To test Raidar, the team gathered writing samples from people and several different chatbots. They did this for a few kinds of text, including news, Yelp reviews, student essays and computer code. Then, the team had several AI models rewrite all the human-written and bot-written samples.

Next, the researchers used a simple computer program to count the changes between the original and edited versions of each writing sample. This step doesn’t require any AI. Based on how many changes a revision made, Raidar could sort writing samples into human-generated and AI-generated. This worked well even if the AI that did the rewriting was different from the AI that wrote the original sample.
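Here is a rough idea of what that change-counting step could look like. This is a minimal sketch in Python, not Raidar’s actual code. It assumes a chatbot’s rewrite has already been collected, and it counts changes as word-level edit distance: the fewest words that must be added, removed or swapped to turn one text into the other. The function names, the example sentences and the 0.2 cutoff are all made up for illustration. Raidar’s real measurements and decision rule may differ.

```python
def word_edit_distance(a: str, b: str) -> int:
    """Fewest word insertions, deletions or substitutions that turn a into b.
    Words are compared exactly as written, including capitals and punctuation."""
    x, y = a.split(), b.split()
    prev = list(range(len(y) + 1))  # distances from an empty start of x
    for i, wx in enumerate(x, start=1):
        curr = [i]
        for j, wy in enumerate(y, start=1):
            cost = 0 if wx == wy else 1
            curr.append(min(prev[j] + 1,          # delete wx
                            curr[j - 1] + 1,      # insert wy
                            prev[j - 1] + cost))  # keep or swap the word
        prev = curr
    return prev[-1]


def change_ratio(original: str, rewrite: str) -> float:
    """Edits per word of the original, so short and long texts compare fairly."""
    return word_edit_distance(original, rewrite) / max(len(original.split()), 1)


# Hypothetical cutoff chosen only for this example.
THRESHOLD = 0.2


def looks_ai_generated(original: str, rewrite: str, threshold: float = THRESHOLD) -> bool:
    """Few changes after a bot rewrite is treated as a sign of AI-written text."""
    return change_ratio(original, rewrite) < threshold


# Made-up examples: a casual human review and a polished, bot-style sentence.
human = "the cafe was kinda loud but the tacos were realy good"
human_rewrite = "The cafe was somewhat noisy, but the tacos were really delicious."
ai_text = "The cafe was somewhat noisy, but the tacos were really delicious."
ai_rewrite = "The cafe was somewhat noisy, but the tacos were truly delicious."

print(word_edit_distance(human, human_rewrite), looks_ai_generated(human, human_rewrite))  # 5 False
print(word_edit_distance(ai_text, ai_rewrite), looks_ai_generated(ai_text, ai_rewrite))    # 1 True
```

In this toy run, the bot changed 5 of the 11 words in the human review but only 1 of the 11 words in the bot-style sentence. A single fixed cutoff like this one would make mistakes on real writing, which is one reason the researchers’ tool is not perfect either.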

Raidar’s sorting is not perfect. The tool sometimes identifies a human text as AI, or vice versa. But it performs better than other tools designed to detect AI-written text, the researchers found.

Most other tools use AI models and statistics to learn to recognize the kind of text that bots produce. These tools typically work best on longer passages of text. They may not work at all on short blurbs, like the ones found on social media or homework assignments. But Raidar works well even on text that’s just 10 words long.

A red flag

Yang and Mao’s team is working to make Raidar an online tool that anyone can use. When it’s done, people could send text through the tool and find out if it was likely AI-generated or not.

Until then, the idea behind Raidar is easy for anyone to use, says Yang. “You don’t have to be a computer scientist or data scientist.” For example, a suspicious teacher could ask any chatbot to rewrite a student’s work. If the bot makes very few edits, that could be a red flag that the student may have used AI.

Bhattacharjee notes that teachers shouldn’t take action based on Raidar’s output alone. “The final judgment should not be based entirely on this tool,” she says. That’s because Raidar isn’t always correct. Also, some students may have good reasons to use AI. For example, AI can help clean up grammar.

Meanwhile, Yang is thinking about how something like Raidar might flag other types of AI-generated media. He’s now studying what happens if you ask an AI model to revise an image, video or audio clip. If the model makes a lot of edits, that could indicate original human work.