How artificial intelligence could help us talk to animals

What might sperm whales and other animals be saying to each other?

Sperm whales have the largest brains of any animal on Earth. They make clicks to communicate and socialize. Using artificial intelligence, researchers are working to learn how to translate what these clicks mean.

Amanda Cotton

A sperm whale surfaces, exhaling a cloud of misty air. Its calf comes in close to drink milk. When the baby has had its fill, mom flicks her tail. Then, together the pair dive down deep. Gašper Beguš watches from a boat nearby. “You get this sense of how vast and different their world is when they dive,” he says. “But in some ways, they are so similar to us.”

Sperm whales have families and other important social relationships. They also use loud clicking sounds to communicate. It seems as if they might be talking to each other.

Beguš is a linguist at the University of California, Berkeley. He got the chance, last summer, to observe sperm whales in their wild Caribbean habitat off the coast of the island nation of Dominica. With him were marine biologists and roboticists. There were also cryptographers and experts in other fields. All have been working together to listen to sperm whales and figure out what they might be saying.

They call this Project CETI. That’s short for Cetacean Translation Initiative (because sperm whales are a type of cetacean).

Sperm whale clicks

A CETI tag attached to a sperm whale captured these sounds in January 2022. You can hear several different whales clicking. Does it seem like it could be a conversation?

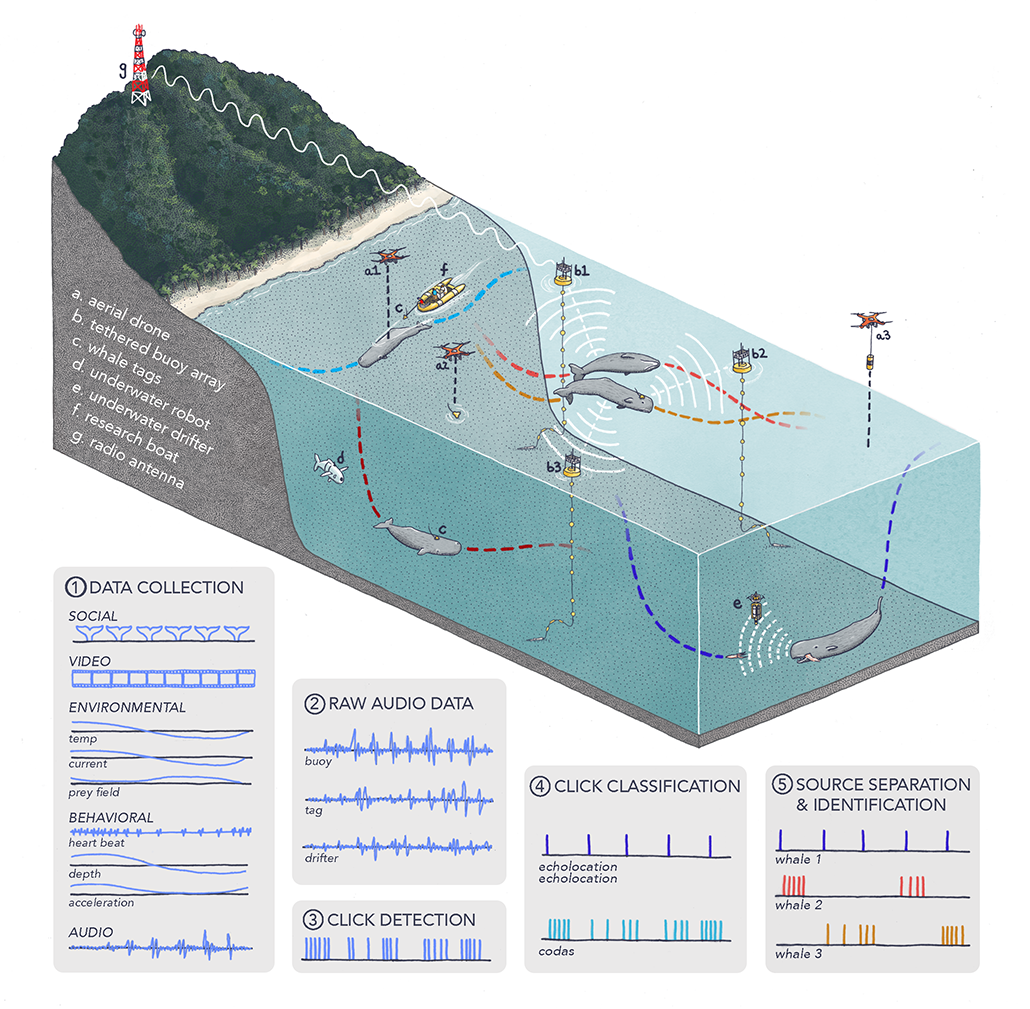

To get started, Project CETI has three listening stations. Each one is a cable hanging deep into the water from a buoy at the surface. Along the cable, several dozen underwater microphones record whale sounds.

From the air, drones record video and sounds. Soft, fishlike robots do the same underwater. Suction-cup tags on the whales capture even more data. But just collecting all these data isn’t enough. The team needs some way to make sense of it all. That’s where artificial intelligence, or AI, comes in.

A type of AI known as machine learning can sift through vast amounts of data to find patterns. Thanks to machine learning, you can open an app and use it to help you talk to someone who speaks Japanese or French or Hindi. One day, the same tech might translate sperm-whale clicks.

Project CETI’s team is not the only group turning to AI for help deciphering animal talk. Researchers have trained AI models to sort through the sounds of prairie dogs, dolphins, naked mole rats and many other creatures. Could their efforts crack the codes of animal communication? Let’s take a cue from the sperm whales and dive in head first.

Watch out for the person wearing blue!

Long before AI came into the picture, scientists and others were working to understand animal communication. Some learned that vervet monkeys have different calls when warning of leopards versus eagles or pythons. Others discovered that elephants communicate in rumbles too low for human ears to hear. Bats chatter in squeaks too high for our hearing. Still other groups have explored how hyenas spread scents to share information and how bees communicate through dance. (By the way: Karl von Frisch won a Nobel Prize in 1973 for his work in the 1920s that showed bees can communicate information through dance.)

Do these types of communication systems count as language? Among scientists, that’s a controversial question. No one really agrees on how to define language, says Beguš.

Prairie dog alarm calls

Listen to these Gunnison’s prairie dog alarm calls. Can you hear differences between them?

Call 1:

Call 2:

Call 3:

The first is the call for a coyote. The second is for a dog. The sight of a human triggered the third call.

Human language has many important features. It’s something we must learn. It allows us to talk about things that aren’t in the here and now. It allows us to do lots more, too, such as invent new words. So a better question, Beguš says, is “what aspects of language do other species have?”

Con Slobodchikoff studied prairie dog communication for more than 30 years. A biologist based near Flagstaff, Ariz., he turned up many surprising language-like features in the rodents’ alarm calls.

Prairie dogs live in large colonies across certain central U.S. grasslands. Like vervet monkeys, these animals call out to identify different threats. They have unique “words” for people, hawks, coyotes and pet dogs.

But that’s not all they talk about. Their call for a predator also includes information about size, shape and color, Slobodchikoff’s research has shown.

In one 2009 experiment, three women of similar sizes took turns walking through a colony of Gunnison’s prairie dogs. Their outfits were identical except that on each walk they wore either a green, blue or yellow T-shirt. Each woman passed through about 30 times. Meanwhile, an observer recorded the first alarm call a prairie dog made in response to the intruder.

All the calls fit a general pattern for “human.” However, shoutouts about people in blue shirts shared certain features. The calls for yellow or green shirts shared different features. This makes sense, because prairie dogs can see differences between blue and yellow but can’t see green as a distinct color.

“It took us a long time to measure all these things,” says Slobodchikoff. AI, he notes, has the potential to greatly speed up this type of research. He has used AI to confirm some of his early research. He also suspects AI may discover “patterns that [we] might not have realized were there.”

Chirp chirp

What carries meaning?

AI’s ability to find hidden patterns is something that excites Beguš, too. “Humans are biased,” he says. “We hear what is meaningful to us.” But the way we use sound in our languages may not be anything like how animals use sound to communicate.

To build words and sentences, human languages use units of sound called phonemes (FOH-neems). Meaning depends on which phonemes are used and in what order. In English, swapping the sound “d” for “fr” makes the difference between “dog” and “frog.” In tonal languages, such as Chinese, using a high or low voice can also change the meaning of words.

Animal languages could use any aspect of sound to carry meaning. Slobodchikoff found that prairie dog calls contain more layers of frequencies than human voices do. He suspects that the way these layers interact helps shape meaning.

Before Project CETI began, researchers had already collected and studied lots of sperm whale clicks. They call a group of clicks a “coda.” A coda sounds a bit like Morse code. (Patterns of short and long beeps represent letters in Morse code.) Researchers suspected that the number and timing of the clicks in codas carried meaning.
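To picture what “number and timing” means, here is a minimal Python sketch. It assumes someone has already marked the moment of each click in a recording. The function name `describe_coda` and the click times are made up for illustration; they do not come from Project CETI.

```python
def describe_coda(click_times):
    """Summarize a coda by its click count, total length and rhythm."""
    if len(click_times) < 2:
        raise ValueError("a coda needs at least two clicks")
    times = sorted(click_times)
    # Gap between each click and the next one, in seconds.
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    duration = times[-1] - times[0]
    # Rhythm: each gap as a share of the whole coda, so a fast and a
    # slow version of the same pattern look alike.
    rhythm = [gap / duration for gap in gaps]
    return {"clicks": len(times), "duration": duration, "rhythm": rhythm}


# Hypothetical click times in seconds (not real whale data).
coda = [0.0, 0.2, 0.4, 0.8]
summary = describe_coda(coda)
print(summary)
```

Two codas with the same click count and a similar rhythm could then be treated as the same pattern, even if one whale clicks faster than another.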

Beguš built an AI model to test this.

His computer model contained two parts. The first part learned to recognize sperm whale codas. It worked from a collection of sounds recorded in the wild. The second part of the model never got to hear these sounds. It trained by making random clicks. The first part then gave feedback on whether these clicks sounded like a real coda.

Over time, the second part of the model learned to create brand-new codas that sounded very real. These brand-new codas might sound like nonsense to whales. But that doesn’t matter. What Beguš really wanted to know was: How did the model create realistic codas?
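The back-and-forth between the two parts can be imitated with a toy program. This sketch is not the model Beguš built, and it is not a neural network. It is a simple trial-and-error loop, and the coda numbers and function names are invented. The “critic” learns the typical gaps between clicks from a few made-up codas. The “generator” never sees those codas. It starts with random gaps and keeps only the changes the critic scores as more realistic.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch behaves the same on every run

# Made-up "real" codas: each is a list of gaps between clicks, in seconds.
REAL_CODAS = [
    [0.20, 0.21, 0.19, 0.40],
    [0.19, 0.20, 0.20, 0.42],
    [0.21, 0.20, 0.21, 0.39],
]


def train_critic(codas):
    """Part one: learn the typical gap at each position in a real coda."""
    return [statistics.mean(position) for position in zip(*codas)]


def critic_score(typical_gaps, candidate):
    """Feedback: 0 is a perfect match; more negative is less realistic."""
    return -sum((c - t) ** 2 for c, t in zip(candidate, typical_gaps))


def train_generator(typical_gaps, steps=2000):
    """Part two: start from random gaps, keep changes the critic prefers."""
    guess = [random.uniform(0.0, 1.0) for _ in typical_gaps]
    first_guess = list(guess)
    for _ in range(steps):
        # Nudge every gap a little; gaps cannot be negative.
        trial = [max(0.0, g + random.gauss(0.0, 0.02)) for g in guess]
        if critic_score(typical_gaps, trial) > critic_score(typical_gaps, guess):
            guess = trial
    return first_guess, guess


typical = train_critic(REAL_CODAS)
start, fake_coda = train_generator(typical)
print("critic score at start:", critic_score(typical, start))
print("critic score at end:  ", critic_score(typical, fake_coda))
```

The generator only ever receives a score, yet its gaps drift toward the real pattern. Looking at what it ended up producing hints at which features the critic cares about.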

The number and timing of clicks mattered, just as the researchers had suspected. But the model revealed new patterns that the experts hadn’t noticed. One has to do with how each click sounds. It seems important that some frequencies are louder than others.

Beguš and his colleagues shared their findings March 20 at arXiv.org. (Studies posted on that site have not yet been vetted by other scientists.)

Part of their world

Once researchers know which features of sperm whale sounds are most important, they can begin to guess at their meaning.

For that, scientists need context. That’s why Project CETI is gathering so much more than just sounds. Its equipment is tracking everything from water temperatures around the whales to whether there are dangerous orcas or tasty squid nearby. “We are trying to have a really good representation of their world and what is important to them,” Beguš explains.

This gets at a tricky aspect of animal translation — one that has nothing to do with technology. It’s a philosophical question. To translate what whale sounds mean, we need to figure out what they talk about. But how can we understand a whale’s world?

In 1974, the philosopher Thomas Nagel published a famous essay: “What is it like to be a bat?” No matter how much we learn about bats, he argued, we’ll never understand what it feels like to be one. We can imagine flying or sleeping upside-down, of course. But, he noted, “it tells me only what it would be like for me to behave as a bat behaves.”

Imagining life as any other species poses the same problem. Marcelo Magnasco is a physicist at the Rockefeller University in New York City who studies dolphin communication. He notes that linguists have made lists of words common to all human languages. Many of these words — such as sit, drink, fire — would make no sense to a dolphin, he says. “Dolphins don’t sit,” he notes. “They don’t drink. They get all their water from the fish they eat.”

Similarly, dolphins likely have concepts for things that we never talk about. To get around, they make pulses of sound that bounce off certain types of objects nearby. This is called echolocation.

In water, sound waves pass through some objects. When a dolphin echolocates off a person or fish, it “sees” right through to the bones! What’s more, Magnasco notes, dolphins might be able to repeat the perceived echoes to other dolphins. This would be sort of like “communicating with drawings made with the mouth,” he says.

People don’t echolocate, so “how would we be able to translate that?” he asks. These and other experiences have no human equivalent. And that could make it very difficult to translate what dolphins are saying.

Whistle whistle squeak whee!

Chatting with dolphins

Yet dolphins do share some experiences with people. “We’re social. We have families. We eat,” notes marine biologist Denise Herzing. She is the founder and research director of the Wild Dolphin Project in Jupiter, Fla. For almost 40 years, she has studied a group of wild Atlantic spotted dolphins. Her goal has been to find meaning in what they’re saying to each other.

This is very slow work. She points out, for instance, “We don’t know if they have a language — and if so, what it is.”

One thing researchers do know is that dolphins identify themselves using a signature whistle. It’s a bit like a name.

Dolphin signature whistles

A signature whistle is a bit like a dolphin’s name. Luna and Trimy are two adult female Atlantic spotted dolphins that Denise Herzing works with. But they don’t call themselves “Luna” or “Trimy.” They use these whistles.

Luna:

Trimy:

Early on, Herzing gave herself a signature whistle. She also records the whistles of the dolphins she works with. She uses a machine called CHAT box to play these whistles back to dolphins. “If they show up, we can say, ‘Hi, how are you?’” she explains.

Today, CHAT box runs on a smartphone. It contains more than just signature whistles. Herzing and her team invented whistle-words to identify things dolphins like to play with, including a rope toy. When the researchers and dolphins play with these items, the people use CHAT box to say the whistle-words. Some dolphins may have figured out what they mean. In a 2013 TED Talk, Herzing shared a video of herself playing the rope sound. A dolphin picked up the rope toy and brought it to her.

The next level would be for a dolphin to use one of these invented “words” on its own to ask for a toy. If it does, the CHAT box will decode it and play back the English word to the researchers. It already does this when the researchers play the whistle-words to each other. (One time a dolphin whistled a sound that the CHAT box translated as “seaweed.” But that turned out to be a CHAT box error, so the team is still waiting and hoping.)

Herzing is also using AI to sift through recorded dolphin calls. It then sorts them into different types of sound. Like the Project CETI team, she’s also matching sounds with behaviors. If a certain type of sound always comes up when mothers are disciplining calves, for example, that might help reveal structure or meaning. She notes that “the computer is not a magic box.” Asking it to interpret all this, she says, is “so much harder than you think.”
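Matching sounds with behaviors is, at its simplest, a counting job. The Python sketch below assumes each recording has already been labeled with a sound type and with the behavior seen at the same moment. The labels and field notes are invented, and this is not the Wild Dolphin Project’s software.

```python
from collections import Counter, defaultdict

# Made-up field notes: (sound type, behavior seen at the same moment).
OBSERVATIONS = [
    ("buzz", "disciplining calf"),
    ("buzz", "disciplining calf"),
    ("whistle", "greeting"),
    ("buzz", "feeding"),
    ("whistle", "greeting"),
    ("squawk", "fighting"),
]


def behaviors_by_sound(observations):
    """Count how often each behavior goes along with each sound type."""
    table = defaultdict(Counter)
    for sound, behavior in observations:
        table[sound][behavior] += 1
    return table


def most_common_context(table, sound):
    """Return the behavior most often seen with a sound, and its share."""
    behavior, count = table[sound].most_common(1)[0]
    return behavior, count / sum(table[sound].values())


table = behaviors_by_sound(OBSERVATIONS)
behavior, share = most_common_context(table, "buzz")
print(f"'buzz' went with '{behavior}' in {share:.0%} of cases")
```

A high share is only a clue. A sound that often shows up alongside a behavior does not have to be about that behavior, which is part of why Herzing calls the work so hard.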

Robot chirps

Alison Barker uses AI to study the sounds of another animal: the naked mole rat. These hairless critters live in underground colonies. “They sound like birds. You hear them chirping and tweeting,” says this neurobiologist. She works at the Max Planck Institute for Brain Research. It’s in Frankfurt, Germany.

Naked mole rats use a particular soft chirp when they greet each other. In one study, Barker’s team recorded more than 36,000 soft chirps from 166 animals that lived in seven different colonies. The researchers used an AI model to find patterns in these sounds. AI, says Barker, “really transformed our work.” Without the tool, she says, it would have taken her team more than 10 years to go through the data.

Each colony had its own distinct dialect, the model showed. Baby naked mole rats learn this. And pups raised in a colony different from where they were born will adopt the new colony’s dialect, Barker found.

She also used an AI model to create fake soft chirps. These fit the pattern of each dialect. When her team played these sounds to naked mole rats, they responded to sounds that matched their dialect and ignored sounds that didn’t.

This means that the dialect itself — and not just an individual’s voice — must help these critters understand who belongs in their group.
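One way to picture a dialect is as an average chirp for each colony. The sketch below is a much simpler stand-in for the AI model Barker’s team used. It assumes each chirp has been boiled down to two measurements, pitch and length. The colony names and numbers are invented.

```python
import math

# Hypothetical measurements: (peak pitch in kHz, length in milliseconds).
KNOWN_CHIRPS = {
    "colony A": [(4.1, 95), (4.3, 100), (4.2, 98)],
    "colony B": [(5.0, 120), (5.2, 118), (5.1, 125)],
}


def average_chirp(chirps):
    """Average pitch and length across one colony's chirps."""
    pitches, lengths = zip(*chirps)
    return (sum(pitches) / len(pitches), sum(lengths) / len(lengths))


def guess_colony(chirp, known=KNOWN_CHIRPS):
    """Pick the colony whose average chirp is closest to this one.

    Real work would first put pitch and length on a shared scale;
    this toy version skips that step.
    """
    centers = {name: average_chirp(c) for name, c in known.items()}
    return min(centers, key=lambda name: math.dist(chirp, centers[name]))


print(guess_colony((4.0, 97)))
```

If chirps from one colony keep landing closest to that colony’s own average, the group shares a recognizable pattern. That is the kind of signal a dialect would leave behind.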

Does the soft chirp mean “hello”? That’s Barker’s best translation. “We don’t understand the rules of their communication system,” she admits. “We’re just scratching the surface.”

Naked mole rat toilet call

The soft chirp seems to be how naked mole rats say “hello.” But they make other sounds. “They have a specific ‘toilet’ call,” Alison Barker says. Only the queen and breeding males use it. It’s like a song they sing as they urinate, she says.

Time will tell

AI has greatly cut the time it takes to sort, tag and analyze animal sounds, and to figure out which aspects of those sounds might carry meaning. Perhaps one day we’ll be able to use AI to build a futuristic CHAT box that translates animal sounds into human language, or vice versa.

Project CETI is just one organization working toward this goal. The Earth Species Project and the Interspecies Internet are two others focused on finding ways to communicate with animals.

“AI could eventually get us to the point where we understand animals. But that’s tricky and long-term,” says Karen Bakker. She’s a researcher at the University of British Columbia in Vancouver, Canada.

Sadly, Bakker says, time is not on our side when it comes to studying wild animals. Across the planet, animals are facing threats from habitat loss, climate change, pollution and more. “Some species could go extinct before we figure out their language,” she says.

Plus, she adds, the idea of walking around with an animal translator may seem cool. But many animals might not be interested in chatting.

“Why would a bat want to speak to you?” she asks. What’s interesting to her is what we can learn from how bats and other creatures talk among themselves. We should listen to nature in order to better protect it, she argues. For example, a system set up to record whales or elephants can also track their locations. This can help us avoid whales with our boats or protect elephants from poachers.

Conservation is one goal driving Project CETI. “If we understand [sperm whales] better, we will be better at understanding what’s bothering them,” says Beguš. Learning that a species has something akin to language or culture could also inspire people to work harder to protect it.

For example, some people consider prairie dogs to be pests. But Slobodchikoff has found that when he explains that prairie dogs talk to each other, “people’s eyes light up” in seeming appreciation of the species’ value.

When you protect an animal that has some version of language or culture, you’re not merely conserving nature. You’re also saving a way of life. Herzing says that dolphins deserve a healthy environment so their cultures can thrive.

In the future, instead of guessing at what animals might need, we might just be able to ask them.