Computers can now make fool-the-eye fake videos

It can make people on screen copy the expressions and movements of someone else

A new computer program can manipulate a person in one video so that he or she mirrors the movements of someone in another video. Here, images of Russian President Vladimir Putin are altered to match those of former President Barack Obama.

H. KIM ET AL/ACM TRANSACTIONS ON GRAPHICS 2018


The idea that “the camera never lies” is so last century.

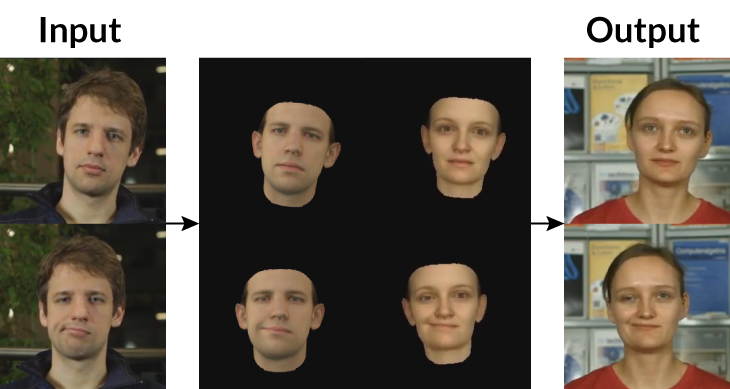

A new computer program can manipulate video in a compelling way. It can make an on-screen person mirror the movements and expressions of someone in a different video. And this program can tamper with far more than just facial expressions. It also can tweak head and torso poses and eye movements. The result: very lifelike fakes.

Researchers presented their video wizardry on August 16 at the 2018 SIGGRAPH meeting in Vancouver, British Columbia, Canada.

These video forgeries are “astonishingly realistic,” says Adam Finkelstein. He’s a computer scientist at Princeton University in New Jersey who was not involved in the work. Such a system could create dubbed films in which the actors’ lips move to perfectly match a voiceover. It could even let actors who have died star in new movies. The computer would simply use old footage to reanimate them, he says, so that they could speak and respond to the actions of others.

More worrisome: This technology could give internet users the power to take fake news to a whole new level. It could put public figures into phony videos that seem totally realistic.

The computer starts by scanning two videos, frame by frame. It tracks 66 facial “landmarks” on a person. These landmarks include points along the eyes, nose and mouth. By plotting where these points fall in each frame, the program can map how features move from one frame to the next. That includes the shape of the lips, the tilt of the head and even where the eyes are directed.
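To make the landmark idea concrete, here is a minimal sketch in Python. It assumes each frame has already been reduced to a list of (x, y) landmark positions. The real system tracks 66 of them, but the toy example below uses three. The function names and the choice of which two points count as the eyes are hypothetical, made up for illustration. This is not the researchers’ code. It only shows how comparing landmark positions across frames reveals motion and head tilt.

```python
import math

# A landmark is an (x, y) position in image coordinates.
Point = tuple[float, float]


def landmark_motion(prev: list[Point], curr: list[Point]) -> list[Point]:
    """Return how far each landmark moved (dx, dy) between two consecutive frames."""
    if len(prev) != len(curr):
        raise ValueError("both frames must track the same landmarks")
    return [(cx - px, cy - py) for (px, py), (cx, cy) in zip(prev, curr)]


def head_tilt_degrees(frame: list[Point], left_eye: int, right_eye: int) -> float:
    """Angle of the line joining two eye landmarks; 0.0 means the eyes are level.

    The eye indices are hypothetical: they depend on how a given
    landmark tracker numbers its points.
    """
    (lx, ly), (rx, ry) = frame[left_eye], frame[right_eye]
    return math.degrees(math.atan2(ry - ly, rx - lx))


def track(frames: list[list[Point]]) -> list[list[Point]]:
    """Landmark motion for every pair of consecutive frames in a clip."""
    return [landmark_motion(a, b) for a, b in zip(frames, frames[1:])]


# Toy clip: landmark 0 = left eye, 1 = right eye, 2 = mouth (hypothetical layout).
frame0 = [(0.0, 0.0), (10.0, 0.0), (5.0, 8.0)]
frame1 = [(0.0, 1.0), (10.0, 1.0), (5.0, 10.0)]  # head dips slightly, mouth opens
print(track([frame0, frame1]))      # [[(0.0, 1.0), (0.0, 1.0), (0.0, 2.0)]]
print(head_tilt_degrees(frame0, 0, 1))  # 0.0
```

In the toy output, both eyes shift by the same amount while the mouth point moves twice as far. That pattern is the kind of signal that separates a moving head from a changing expression.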

In one test, the system mirrored former President Barack Obama’s expressions and movements onto Russian President Vladimir Putin. To do this, the program distorted Putin’s image. It morphed Putin so that the landmarks on his face and body now matched, frame by frame, those in a video of Obama.
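One simple way to picture “making one face’s landmarks match another’s” is displacement transfer. You measure how far each of Obama’s landmarks has moved from a resting pose, then move Putin’s matching landmarks by the same amount. The Python sketch below assumes a neutral (resting) frame exists for each person, and it scales the motion by the ratio of the two faces’ eye spacing. The function names, the neutral-frame idea and the eye-spacing scale are assumptions chosen for illustration. The researchers’ actual method is far more sophisticated, and this sketch moves only landmark coordinates. It does not warp or redraw any pixels.

```python
import math

Point = tuple[float, float]


def face_scale(source_neutral: list[Point], target_neutral: list[Point],
               left_eye: int, right_eye: int) -> float:
    """Ratio of target to source eye spacing, so motion fits a bigger or smaller face."""
    src = math.dist(source_neutral[left_eye], source_neutral[right_eye])
    tgt = math.dist(target_neutral[left_eye], target_neutral[right_eye])
    return tgt / src


def transfer_motion(source_neutral: list[Point], source_frame: list[Point],
                    target_neutral: list[Point], scale: float = 1.0) -> list[Point]:
    """Move each target landmark by the source landmark's (scaled) offset from rest."""
    if not (len(source_neutral) == len(source_frame) == len(target_neutral)):
        raise ValueError("all landmark lists must have the same length")
    return [
        (tx + scale * (sx - nx), ty + scale * (sy - ny))
        for (nx, ny), (sx, sy), (tx, ty) in zip(source_neutral, source_frame, target_neutral)
    ]


def retarget_video(source_frames: list[list[Point]], target_neutral: list[Point],
                   scale: float = 1.0) -> list[list[Point]]:
    """Target landmarks for every source frame, treating the first frame as the resting pose."""
    source_neutral = source_frames[0]
    return [transfer_motion(source_neutral, f, target_neutral, scale) for f in source_frames]


# Hypothetical two-landmark faces: index 0 = left eye, 1 = right eye.
source_rest = [(0.0, 0.0), (10.0, 0.0)]
target_rest = [(100.0, 50.0), (120.0, 50.0)]  # target face drawn twice as large
s = face_scale(source_rest, target_rest, 0, 1)  # 2.0
print(transfer_motion(source_rest, [(0.0, 2.0), (10.0, 2.0)], target_rest, s))
# [(100.0, 54.0), (120.0, 54.0)]
```

Scaling by eye spacing keeps a small nod on the source face from becoming an exaggerated lurch on a face that fills more of the frame.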

The program also can tweak shadows. It can change Putin’s hair. It can even adjust the height of his shoulders to match a new head pose. The result was a video of Putin doing an eerily on-point imitation of Obama.

Computer scientist Christian Theobalt works at the Max Planck Institute for Informatics in Saarbrücken, Germany. His team tested its program on 135 volunteers. All of them watched five-second clips of real and forged videos. Afterward, they reported whether a clip appeared to be authentic or a fake.

The fakes fooled viewers about half the time. And these people may have been more suspicious of the doctored footage than viewers normally would be. After all, they knew they were taking part in a study. They would have had far less reason to doubt the videos if they had merely viewed them online at home, presented as news. Even when the test participants were watching genuine clips, they judged about one in every five of them to be fake.

The new software is far from perfect. It can tweak only videos shot with a stationary (unmoving) camera. The person’s head and shoulders must also be framed in front of an unchanging background. And the system cannot shift someone’s pose too much. For instance, it could not edit a clip of Putin speaking directly into the camera to make him turn around. Why? The software wouldn’t know what the back of Putin’s head looked like.

Still, one can imagine how this type of digital puppetry could be used to spread dangerous misinformation.

Kyle Olszewski is a computer scientist at the University of Southern California in Los Angeles. “The researchers who are developing this stuff are getting ahead of the curve,” he says. With luck, showing what is now possible may encourage others to treat internet videos with more skepticism, he says.

What’s more, he adds: “Learning how to do these types of manipulations is [also] a step towards understanding how to detect them.” A future computer program, for instance, might probe the details of true and falsified videos to become an expert at spotting fakes.