Easy for you, tough for a robot

Engineers are trying to reduce robots’ clumsiness — and boost their common sense

A robot can easily calculate a winning chess move. But it would have a lot of trouble picking up and moving a chess piece. That’s because grasping and moving a chess piece isn’t nearly as easy as it seems.

baona/iStock/Getty Images Plus

You’re sitting across from a robot, staring at a chess board. Finally, you see a move that looks pretty good. You reach out and push your queen forward. Now it’s the robot’s turn. Its computer brain calculates a winning move in a fraction of a second. But when it tries to grab a knight, it knocks down a row of pawns. Game over.

“Robots are klutzes,” says Ken Goldberg. He’s an engineer and artificial intelligence (AI) expert at the University of California, Berkeley. A computer can easily defeat a human grandmaster at the game of chess by coming up with better moves. Yet a robot has trouble picking up an actual chess piece.

This is an example of Moravec’s paradox. Hans Moravec is a roboticist at Carnegie Mellon University in Pittsburgh, Penn., who also writes about AI and the future. Back in 1988, he wrote a book that noted how reasoning tasks that seem hard to people are fairly easy to program into computers. Meanwhile, many tasks that come easily to us — like moving around, seeing or grasping things — are quite hard to program.

It’s “difficult or impossible” for a computer to match a one-year-old baby’s skills in these areas, he wrote. Though computers have advanced by leaps and bounds since then, babies and kids still beat machines at these types of tasks.

It turns out that the tasks we find easy aren’t really “easy” at all. As you walk around your house or pick up and move a chess piece, your brain is performing incredible feats of calculation and coordination. You just don’t notice it because you do it without thinking.

Let’s take a look at several tasks that are easy for kids but not for robots. For each one, we’ll find out why the task is actually so hard. We’ll also learn about the brilliant work engineers and computer scientists are doing to design new AI that should help robots up their game.

Task 1: Pick stuff up

Goldberg has something in common with robots. He, too, is a klutz. “I was the worst kid at sports. If you threw me a ball, I was sure to drop it,” he says. Perhaps, he ponders, that’s why he wound up studying robotic grasping. Maybe he’d figure out the secret to holding onto things.

He’s discovered that robots (and clumsy humans) face three challenges in grabbing an object. Number one is perception. That’s the ability to see an object and figure out where it is in space. Cameras and sensors that measure distance have gotten much better at this in recent years. But robots still get confused by anything “shiny or transparent,” he notes.

The second challenge is control. This is your ability to move your hand accurately. People are good at this, but not perfect. To test yourself, Goldberg says, “Reach out, then touch your nose. Try to do it fast!” Then try a few more times. You likely won’t be able to touch the exact same spot on your nose every single time. Likewise, a robot’s cameras and sensors won’t always be in perfect sync with its moving “hand.” If the robot can’t tell exactly where its hand is, it could miss something or drop it.

Physics poses the final challenge. To grasp something, you must understand how that object could shift when you touch it. In principle, physics predicts that motion. But at small scales, the motion can be surprisingly hard to predict. To see why, put a pencil on the floor, then give it a big push. Put it back in its starting place and try again. Goldberg says, “If you push it the same way three times, the pencil usually ends up in a different place.” Very tiny bumps on the floor or the pencil may change the motion.

Despite these challenges, people and other animals grasp things all the time — with hands, tentacles, tails and mouths. “My dog Rosie is pretty good at grasping anything in our house,” says Goldberg. Millions of years of evolution provided brains and bodies with ways to adapt to all three challenges. We tend to use what Goldberg calls “robust grips.” These are secure grips that work even if we run into problems with perception, control or physics. For example, if a toddler wants to pick up a block to stack it, she won’t try to grab a corner, which might slip out of her grasp. Instead, she’s learned to put her fingers on the flat sides.

To help robots learn robust grips, Goldberg’s team set up a virtual world. Called DexNet, it’s like a training arena for a robot’s AI. The AI model can practice in the virtual world to learn what types of grasps are most robust for what types of objects. The DexNet world contains more than 1,600 different virtual 3-D objects and five million different ways to grab them. Some grasps use a claw-like gripper. Others use a suction cup. Both are common robot “hand” types.

In a virtual world, a system can have perfect perception and control, and a perfect understanding of physics. So to be more like the real world, the team threw in some randomness. For each grasp, they shifted either the object or grabber just a tad. Then they checked to see if the grasp would still hold the object. Every grasp got a score based on how well it held onto an object throughout such random changes.
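To make that scoring idea concrete, here is a toy sketch in Python. It is not DexNet's code. It assumes a made-up, flat world: we look down on a block, a grasp is described by a single number (how far from the block's center the gripper closes), and a grasp "holds" if the whole fingertip still lands on a flat face after small random nudges. The names `grasp_holds` and `robustness_score`, the bell-curve (Gaussian) nudges and all the numbers are illustrative assumptions. The score is simply the fraction of nudged trials in which the grasp still holds.

```python
import random

# Toy assumption: we look down on a rectangular block. A grasp is described by
# one number, how far from the block's center the gripper closes (the offset).
def grasp_holds(offset, half_width, finger_half_width):
    """Hypothetical rule: the grasp holds if the whole fingertip still lands
    on the block's flat face instead of hanging off a corner."""
    return abs(offset) + finger_half_width <= half_width

def robustness_score(offset, half_width=2.0, finger_half_width=0.5,
                     noise=0.3, trials=1000, seed=0):
    """Fraction of randomly nudged trials in which the grasp still holds."""
    rng = random.Random(seed)  # fixed seed, so every grasp sees the same nudges
    held = 0
    for _ in range(trials):
        # Shift the object and the gripper "just a tad", independently.
        object_shift = rng.gauss(0.0, noise)
        gripper_shift = rng.gauss(0.0, noise)
        # Where the gripper ends up, measured from the (shifted) block's center.
        if grasp_holds(offset + gripper_shift - object_shift,
                       half_width, finger_half_width):
            held += 1
    return held / trials

# Compare a grasp at the center, one partway out, and one near the corner.
candidates = [0.0, 0.8, 1.4]
scores = {offset: robustness_score(offset) for offset in candidates}
best = max(candidates, key=robustness_score)
print(scores)
print("most robust offset:", best)  # expected: the centered grasp, 0.0
```

A grasp in the middle of a flat face survives almost every nudge, while one near the corner often slips off, which matches the toddler's strategy. DexNet works with 3-D objects and real gripper types such as claws and suction cups rather than a flat toy block.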

After a robot has completed this training, it can figure out its own robust grasp for a real-world object it has never seen before.

Thanks to research like this, robots are getting much less clumsy. Maybe someday a grasping robot will be able to clean up your room.

Task 2: Get around the world

If someone plops you down in the middle of a building you’ve never been in before, you might feel a bit lost. But you could look around, find a door and get out of the room quickly without bumping into anything or getting stuck. Most robots could not do that. Researcher Antoni Rosinol first got interested in this problem while working with drones. Usually, someone pilots a drone via remote control. That’s because most drones can’t fly very well by themselves. “They can barely go forward without colliding against a tree,” notes Rosinol. He is a PhD student studying computer vision at the Massachusetts Institute of Technology (MIT) in Cambridge.

Perception is a big problem for many navigating robots, just as it is for robots that grasp things. The robot needs to map the entire space around itself. It needs to understand the shape, size and distance of objects. It also has to identify the best paths to get where it needs to go.

Computer vision has gotten very good at detecting and even identifying the objects around a robot. Some robots, advanced drones and self-driving cars do a very good job at getting around obstacles. But too often, they still make mistakes. One of the trickiest things for a navigating robot to handle is a large, blank space such as a ceiling, wall or expanse of sky. “A machine has a lot of trouble understanding what’s going on there,” Rosinol explains.

Another issue is that robots don’t understand anything about human living spaces. A robot trying to get out of an unfamiliar room will usually circle around, looking for openings everywhere — including on the floor. If it finds a bathroom, it may go in, not realizing that this room won’t lead anywhere else.

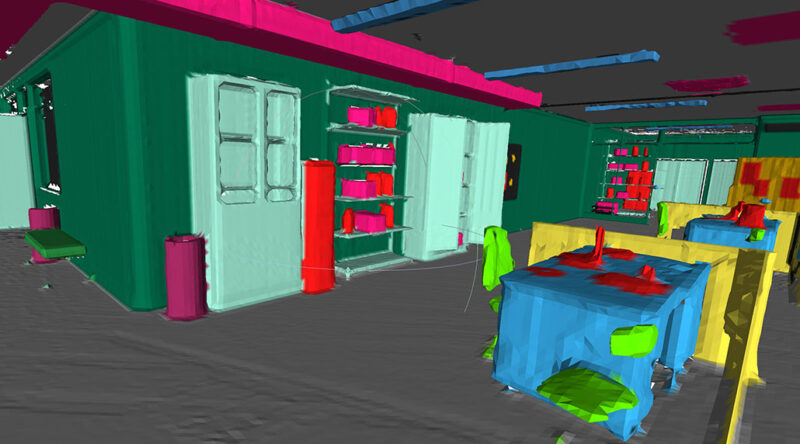

The MIT team has developed a system that can help solve this problem. They call it Kimera. “It’s a group of maps for robots,” says Rosinol. Those maps are nested into layers. The bottom layer is what most robots already create. It’s a map of the shape of the three-dimensional space around them. This shape has no meaning to a robot, however. All it sees at this level is a bumpy mass, as if the world around it were all the same color. It can’t pick out walls, doorways, walking people, potted plants or other things.

Kimera’s other layers add meaning. The second layer divides up the bumpy mass into objects and agents. Objects are things like furniture that don’t move around. Agents, such as people and other robots, do move. A third layer identifies places and structures. Places are open areas that the robot can move through, such as doorways or corridors. Structures are walls, floors, pillars and other parts of the building itself. The final two map layers identify rooms and the entire building that those rooms belong to. A robot equipped with Kimera can build all these maps at once as it moves through some space. This should help it more easily find a direct path.
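Here is a toy sketch of how the upper layers might help with that. It is not Kimera's actual software. It assumes the lower layers have already done their work, so every room is labeled and the robot knows which rooms share a doorway. The `Room` and `Building` classes and the room names are hypothetical. A breadth-first search over rooms then finds the route that passes through the fewest rooms.

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class Room:
    name: str
    objects: list = field(default_factory=list)   # things that don't move, like furniture
    agents: list = field(default_factory=list)    # things that do move, like people
    doorways: list = field(default_factory=list)  # names of rooms reachable through a door

@dataclass
class Building:
    name: str
    rooms: dict = field(default_factory=dict)  # room name -> Room

    def add_doorway(self, a, b):
        """Record a doorway connecting rooms a and b (doors work both ways)."""
        self.rooms[a].doorways.append(b)
        self.rooms[b].doorways.append(a)

    def route(self, start, goal):
        """Breadth-first search over the room layer. Returns the rooms on a
        route that passes through the fewest rooms, or None if unreachable."""
        came_from = {start: None}
        queue = deque([start])
        while queue:
            room = queue.popleft()
            if room == goal:
                path = []
                while room is not None:  # walk back to the start
                    path.append(room)
                    room = came_from[room]
                return path[::-1]
            for neighbor in self.rooms[room].doorways:
                if neighbor not in came_from:
                    came_from[neighbor] = room
                    queue.append(neighbor)
        return None

house = Building("house")
for name in ["bedroom", "hallway", "bathroom", "kitchen", "outside"]:
    house.rooms[name] = Room(name)
house.rooms["bedroom"].objects.append("bed")
house.rooms["hallway"].agents.append("person")
house.add_doorway("bedroom", "hallway")
house.add_doorway("hallway", "bathroom")  # the bathroom's only door
house.add_doorway("hallway", "kitchen")
house.add_doorway("kitchen", "outside")
print(house.route("bedroom", "outside"))  # ['bedroom', 'hallway', 'kitchen', 'outside']
```

Planning over a handful of labeled rooms is much simpler than searching a huge, bumpy 3-D shape. And a room like the bathroom, with only one doorway, can never end up on the route between two other rooms.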

Task 3: Understand people

No matter what a robot tries to do, one big thing holds it back. Robots lack common sense. This is the knowledge that people don’t have to think or talk about because it’s so obvious to us. Thanks to common sense, you know that you should grab a lollipop by the stick and not by the candy. You know that doors are almost never located on the floor or ceiling. And so on.

Computer scientists have tried teaching robots common-sense rules. But even huge databases of these rules don’t seem to help much. There are just too many rules and too many exceptions. And no computer or robot understands the world well enough to figure out these rules on its own.

This lack of understanding makes grasping and navigating tougher. It’s also the main reason that virtual assistants sometimes say ridiculous things. Without common sense, an AI model can’t understand people’s words or actions, or guess what they’ll do next. “We want to build AI that can live with humans,” says Tianmin Shu, a computer scientist at MIT. This type of AI must gain common sense.

But how?

We learn a lot of common sense from our experiences in the world. But humans and many other animals have some common sense from birth, or soon after.

A July 2021 study in Science reported that an unborn mouse’s visual system is already active. It’s as if the mouse dreams about moving through the world before it ever opens its eyes. When it’s born, it already understands how to interpret the world around it as it moves.

Before they are 18 months old, human babies understand the difference between agents and objects. They also understand what it means to have a goal and how obstacles can get in the way of a goal. We know this because psychologists have run experiments to test babies’ common sense.

Based on these experiments, Shu decided to make a virtual world called AGENT. Like DexNet, AGENT is a training arena for AI models. The models practice by watching sets of videos that demonstrate a basic common-sense concept. For example, in one video, a brightly colored cube jumps over a pit to reach a purple cylinder on the other side. In the next two scenarios, that pit is gone. In one of them, the cube jumps anyway. In the other, it moves straight to the cylinder. Which of these decisions is more surprising?

Common sense says that you take the most direct route. Babies (and grown-ups) show surprise when they see an unnecessary jump. If an AI model gets rewarded when it shows surprise at the jump, it can learn to show surprise for similar scenarios. However, the model hasn’t learned the deeper concept — that people take the shortest path to a goal to save time and energy.

Shu knows this because he tried training a model on three types of common-sense scenarios. He then tested it on a fourth type. The first three scenarios contained all the concepts needed to understand the fourth. But still his model didn’t get it. “It’s still a grand challenge in AI to get a machine that has the common sense of an 18-month-old baby,” says Melanie Mitchell. She’s an AI expert at the Santa Fe Institute in New Mexico.

Task 4: Think of new ideas

In spite of these challenges, AI has come a very long way — especially in recent years. In 2019, AI models that had learned to recognize both dragons and elephants still couldn’t combine those into a new concept, an elephant dragon. Of course, a child can imagine and draw one quite easily. As of 2021, so can a computer.

Dall-E is a brand-new AI model made by the company OpenAI. It turns a text description into a set of new, creative images. (The name “Dall-E” is a combination of the last name of Spanish artist Salvador Dalí and the robot Wall-E, the star of a 2008 Disney movie.)


Human designers chose the words describing what Dall-E should combine. The AI model then came up with some incredibly cool elephant dragons. Which is your favorite? OpenAI

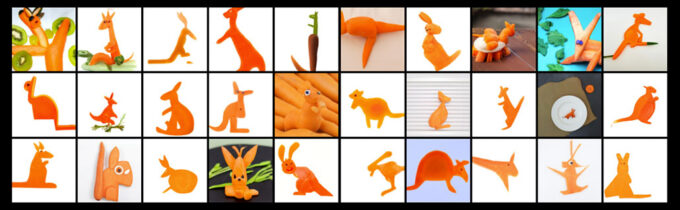

Some of Dall-E’s kangaroos made of carrots look a bit awkward. But for what it’s worth, most people probably wouldn’t make the best carrot art, either. OpenAI

Who knew a confused jellyfish could be so adorable? OpenAI

OpenAI released a website on which people can pick combinations of words from a drop-down list and see what images Dall-E comes up with. Possible combos include a kangaroo made of carrots, a confused jellyfish emoji and a cucumber in a wizard hat eating ice cream. Have a look for yourself at https://openai.com/blog/dall-e/.

Dall-E doesn’t have common sense. So its imaginings aren’t always on target. Some of its carrot-kangaroos look more like awkward orange blobs. And if you tell it to draw a penguin wearing a green hat and a yellow shirt, all the pictures do show penguins, but some of them wear yellow or red hats instead of green ones.

Sometimes, though, Dall-E’s non-human way of seeing things is delightful and creative. For example, if you ask a person to imagine a shark playing chess, they’ll probably draw the shark using its fins as hands. In one of Dall-E’s images, a shark uses its tail instead. Someday, Dall-E or a similar model could help human artists and designers come up with a wider range of ideas. “So many things I’ve seen, I never would have thought of on my own,” says Ashley Pilipiszyn, who until recently was technical director at OpenAI.

It’s very likely that in the coming years someone will design a graceful robot or even an AI model with common sense. For now, though, if you want to beat a robot at chess, make it play on a real, physical chess board.

Kathryn Hulick, a regular contributor to Science News for Students since 2013, has covered everything from acne and video games to ghosts and robotics. This article was inspired by her new book: Welcome to the Future: Robot Friends, Fusion Energy, Pet Dinosaurs, and More. (Quarto, November 2021, 128 pages)