Facial expressions could be used to interact in virtual reality

This technology would allow people who can’t use handheld controllers to play virtual games

Interacting with virtual worlds could be as simple as changing your facial expression.

Benjamin Torode/Moment/Getty Images Plus

When someone pulls on a virtual reality headset, they’re ready to dive into a simulated world. They might be hanging out in VRChat or slashing beats in Beat Saber. Either way, interacting with that world usually involves hand controllers. But new virtual reality (VR) technology out of Australia is hands-free. Users interact with the virtual environment through their facial expressions.

This setup could make virtual worlds more accessible to people who can’t use their hands, says Arindam Dey. He studies human-computer interaction at the University of Queensland in Brisbane. Other hands-free VR tech has let people move through virtual worlds by using treadmills and eye-trackers. But not everyone can walk on a treadmill. And most people find it hard to stare at one spot long enough for the VR system to register the action. Simply making faces may be an easier way for people with disabilities to navigate VR.

Facial expressions could enhance the VR experience for people who can use hand controllers, too, Dey adds. They can allow “special interactions that we do with our faces, such as smiling, kissing and blowing bubbles.”

Dey’s team shared its findings in the April issue of the International Journal of Human-Computer Studies.

Facing off with new technology

In the researchers’ new system, VR users wear a cap studded with sensors. Those sensors record brain activity. The sensors can also pick up facial movements that signal certain expressions. Facial data can then be used to control the user’s movement through a virtual world.

Facial expressions usually signal emotions. So Dey’s team designed three virtual environments for users to explore. An environment called “happy” required participants to catch butterflies with a virtual net. “Neutral” had them picking up items in a workshop. And in the “scary” one, they had to shoot zombies. These environments allowed the researchers to see whether situations designed to provoke certain emotions affected someone’s ability to control VR through expressions.

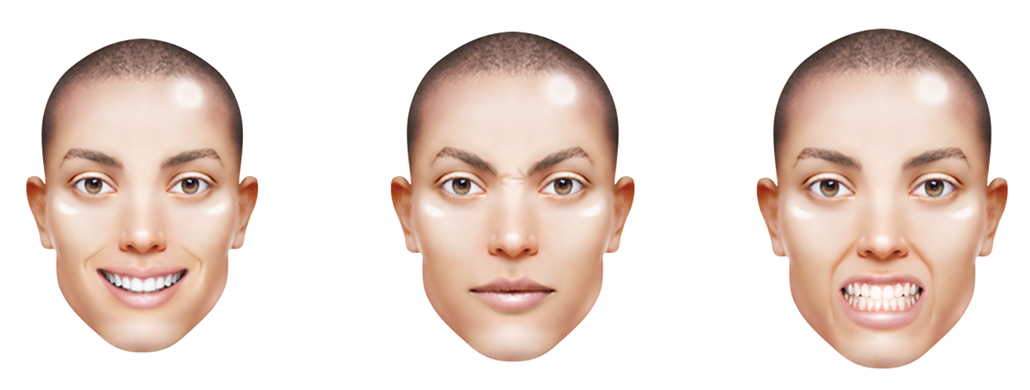

Eighteen young adults tested out the technology. Half of them learned to use three facial expressions to move through the virtual worlds. A smile walked them forward. A frown brought them to a stop. And to perform a task, they clenched their teeth. In the happy world, that task was swooping a net. In the neutral environment, it was picking up an item. In the scary world, it was shooting a zombie.
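The control scheme described above works like a tiny rulebook: each expression maps to one action. Below is a minimal sketch of that mapping, not the researchers’ actual software. It assumes some upstream step has already turned the cap’s sensor signals into one of three expression labels. The `Expression` names, the `step` function and the choice that clenching leaves the walking state unchanged are all hypothetical.

```python
from enum import Enum
from typing import Optional, Tuple


class Expression(Enum):
    # Hypothetical labels. Assumes a separate classifier has already
    # recognized the expression from the cap's sensor data.
    SMILE = "smile"
    FROWN = "frown"
    CLENCH = "clench"


# The task a teeth clench performs in each world, as described in the study.
TASKS = {
    "happy": "swoop net",
    "neutral": "pick up item",
    "scary": "shoot zombie",
}


def step(world: str, moving: bool, expr: Expression) -> Tuple[bool, Optional[str]]:
    """Return the new walking state and the task performed (if any)."""
    if expr is Expression.SMILE:
        return True, None  # smile: walk forward
    if expr is Expression.FROWN:
        return False, None  # frown: stop
    # Clench: perform this world's task. Assumption: walking state is unchanged.
    return moving, TASKS[world]


# Example: walk forward, shoot a zombie, then stop.
moving = False
for expr in (Expression.SMILE, Expression.CLENCH, Expression.FROWN):
    moving, task = step("scary", moving, expr)
    print(expr.value, moving, task)
```

Keeping the mapping in a single lookup table makes it easy to picture Dey’s idea of letting each person choose their own set of expressions: only the table would change.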

The other half of participants interacted with the virtual worlds using hand controllers. This was the control group. It allowed the researchers to compare use of facial expressions with the more common form of VR interaction.

After some training, participants spent four minutes in each of the virtual worlds. When they left each world, participants answered questions about their experience: How easy was their controller to use? How “present” did they feel in that world? How real did it seem? And so on.

Using facial expressions made participants feel more present inside the virtual worlds. But expressions were harder to use than hand controllers. Recordings from the sensor-laden cap showed that the brains of people using facial expressions were working harder than the brains of those who used hand controllers. But that could simply be because these people were learning a new way to interact in VR. Perhaps the facial-expression method would get easier with time. Importantly, virtual settings meant to trigger different emotions did not affect anyone’s ability to control the VR using facial expressions.

Work to be done

“This research pushes the boundary of hands-free interaction in VR,” says Wenge Xu. He studies human-computer interaction at Birmingham City University in England. He was not involved with the study. It’s “novel and exciting,” he says. But “further research is needed to improve the usability of facial expression–based input.”

The researchers are planning more tests and improvements. Everyone in this study, for instance, was able-bodied. In the future, Dey hopes to test the tech with people who have disabilities.

He also plans to explore ways to make interacting through facial expressions easier. That may involve swapping out the sensor-laden caps for some other face-reading technology. One idea: “Use cameras that capture face movements or facial gestures,” he says. Or sensors could be embedded in the foam cushion of a VR headset. Dey imagines that one day people will train VR systems to respond to their own sets of expressions.

“Technology has helped me along the way [in a] world that wasn’t necessarily made for me,” says Tylia Flores. She has cerebral palsy, so standard methods of interaction aren’t always available to her. She feels the new technology could make VR more accessible for people whose physical movements are limited.