Look into My Eyes

Computers are gaining the power to track precisely where you're looking.

By Emily Sohn

If you look deep into a friend’s eyes, you may imagine that you can see his or her thoughts and dreams.

But more likely, you’ll simply see an image of yourself—and whatever lies behind you.

Our eyeballs are like small, round mirrors. Covered by a layer of salty fluid (tears), their surfaces reflect light just like the surface of a pond does.

|

|

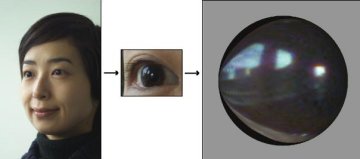

If you look closely into a person’s eye, you’ll see a reflection of the scene in front of the person. In this case, you also see the camera that took the person’s picture. |

| Ko Nishino and Shree Nayar |

From a distance, we see shiny glints in the eyes of other people, says Shree Nayar, a computer scientist at Columbia University in New York City. “If you look up close,” he says, “you’re actually getting a reflection of the world.”

By analyzing the eye reflections of people in photos, Nayar and his colleague Ko Nishino have figured out how to re-create the world reflected in someone’s eyes. Nayar’s computer programs can even pinpoint what a person is looking at.

After magnifying the right eye (middle) of the person shown at the left in this high-resolution photo, a computer can use the reflections in the eye (center) to produce an image of the person’s surroundings. In this case, you can see the sky and buildings.

Credit: Ko Nishino and Shree Nayar

Giving computers the power to trace our gaze could help them interact with us in more humanlike ways. Such a capability could help historians and detectives reconstruct scenes from the past. Filmmakers, video game creators, and advertisers are finding applications of Nayar’s research as well.

“This is a method that people hadn’t thought of before,” says Columbia computer scientist Steven Feiner. “It’s very exciting.”

Eye tracking

Eye-tracking technology already exists, Feiner says, but most systems are clunky or uncomfortable to use. Users often have to keep their heads still. Or they have to wear special contact lenses or headgear so that a computer can read the movement of the centers of their eyes, or pupils.

|

|

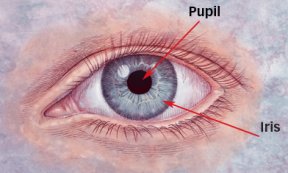

The pupil of the eye allows light in. The iris is the colored area around the pupil. The pupil and iris are covered by a transparent membrane called the cornea. |

Finally, under these circumstances, users know that their eyes are being followed. That may make them act unnaturally, which could confuse the scientists who study them.

Nayar’s system is far stealthier. It requires only a point-and-shoot or video camera that takes high-resolution pictures of people’s faces. Computers can then analyze these images to determine in which direction the people are looking.

To do this, a computer program identifies the line where the iris (the colored part of the eye) meets the white of the eye. If you look directly at a camera, your cornea (the transparent outer covering of the eyeball that covers the pupil and iris) appears perfectly round. But as you glance to the side, the angle of the curve changes. A formula calculates the direction of the eye’s gaze based on the shape of this curve.
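The article does not give the formula itself, but the idea can be sketched with simple geometry. Assume the edge of the iris is a circle viewed from far away. When that circle is turned away from the camera, its outline in the photo becomes an ellipse, and the ratio of the ellipse's short axis to its long axis equals the cosine of the tilt angle. The function name and the pixel measurements below are hypothetical, and this is a simplified illustration rather than the researchers' actual method.

```python
import math


def gaze_tilt_degrees(major_axis: float, minor_axis: float) -> float:
    """Estimate how far the eye is turned away from the camera.

    Assumption: the iris edge is a circle seen from far away, so its
    outline in the photo is an ellipse with minor / major = cos(tilt).
    """
    if major_axis <= 0 or minor_axis < 0 or minor_axis > major_axis:
        raise ValueError("need major_axis > 0 and 0 <= minor_axis <= major_axis")
    return math.degrees(math.acos(minor_axis / major_axis))


# A perfectly round outline: the eye is looking straight at the camera.
print(gaze_tilt_degrees(10.0, 10.0))
# An outline half as narrow as it is long: the eye is turned well to the side.
print(round(gaze_tilt_degrees(10.0, 5.0), 1))
```

The first call gives a tilt of 0 degrees and the second about 60 degrees. The axis ratio alone cannot tell left from right; a full system would also need the orientation of the ellipse in the image.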

Next, Nayar’s program determines the direction from which light is coming as it hits the eye and bounces back to the camera. The calculation is based on laws of reflection and the fact that a normal, adult cornea is shaped like a flattened circle—a curve called an ellipse.
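The law of reflection at the heart of this step can be written in a few lines. A ray traveling in direction d that hits a mirror-like surface with unit normal n leaves in direction d − 2(d·n)n. The sketch below shows only that single bounce; the `reflect` helper is a hypothetical name, and working out the normal at each point of the cornea from its elliptical shape is a separate step not shown here.

```python
import math


def reflect(incoming, normal):
    """Mirror a 3D direction about a surface normal: r = d - 2 (d . n) n."""
    length = math.sqrt(sum(c * c for c in normal))
    if length == 0:
        raise ValueError("normal must not be the zero vector")
    # Scale the normal to unit length so the formula holds.
    n = tuple(c / length for c in normal)
    d_dot_n = sum(d * c for d, c in zip(incoming, n))
    return tuple(d - 2 * d_dot_n * c for d, c in zip(incoming, n))


# A ray heading down and to the right hits a surface that faces straight up.
print(reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0)))
```

The reflected ray heads up and to the right at the same angle, just as a ball bounces off a floor. Running the same rule backward, from the camera to the eye and out into the scene, tells a program which direction each glint of light came from.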

Flattening a circle (left) produces a geometric figure called an ellipse (right).

The computer uses all this information to create an “environment map”—a circular, fishbowl-like image of everything surrounding the eye.

“This is the big picture of what’s around the person,” Nayar says.

“Now comes the interesting part,” he continues. “Because I know how this ellipsoidal mirror is tilted toward the camera, and because I know in which direction the eye is looking, I can use a computer program to find exactly what the person is looking at.”

From an eye reflection, a computer can generate an environment map, which produces an image of what’s in front of a person.

Credit: Ko Nishino and Shree Nayar

The computer makes these calculations rapidly, and the results are highly accurate, Nayar says. His studies show that the program figures out where people are looking to within 5 or 10 degrees. (A full circle is 360 degrees.)
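To get a feel for what an error of 5 or 10 degrees means, it helps to turn the angle into a distance. If a person is looking at something a certain distance away, the estimated gaze point can miss by roughly that distance times the tangent of the error angle. The 2-meter viewing distance below is an assumed example, not a figure from the research, and `gaze_error_radius` is a hypothetical helper.

```python
import math


def gaze_error_radius(distance: float, error_degrees: float) -> float:
    """How far off the estimated gaze point can be at a given distance."""
    if distance < 0 or not 0 <= error_degrees < 90:
        raise ValueError("need distance >= 0 and 0 <= error_degrees < 90")
    return distance * math.tan(math.radians(error_degrees))


# Assumed example: the person is looking at something 2 meters away.
for error in (5, 10):
    print(error, "degrees:", round(gaze_error_radius(2.0, error), 2), "meters")
```

At 2 meters, a 5-degree error is about 0.17 meters and a 10-degree error is about 0.35 meters. That is close enough to tell which object a person is looking at, though not which word on a page.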

I spy

Nayar envisions using the technology to create systems that would make life easier for people who are paralyzed. Using only their eyes and a computer to track where they are looking, such people could type, communicate, or direct a wheelchair.

Psychologists are also interested in better eye-tracking devices, Nayar says. One reason is that the movements of our eyes can reveal whether we’re telling the truth and how we’re feeling.

Advertising experts would like to know which part of an image our eyes are most drawn to so that they could create more effective ads. Also, video games that sense where players are looking could be better than existing games.

It’s possible to figure out what a person is looking at from light reflected in an eye. In this case, the person is looking at a smiling face.

Credit: Ko Nishino and Shree Nayar

Historians have already examined reflections in the eyes of people in old photographs to learn more about the settings in which they were photographed.

And filmmakers are using Nayar’s programs to replace one actor’s face with another’s face in a realistic way. Using an environment map taken from the first actor’s eyes, the computer program can identify every source of light in the scene. The director then re-creates the same lighting on the second actor’s face before digitally swapping it in for the first actor’s face.

Making computers that interact with you on your terms is another long-term goal, Feiner says.

Your computer could let you know about an important e-mail, for example, in a variety of ways. If you’re looking away, you might want the machine to beep. If you happened to be on the phone, a flashing light might be more appropriate. And if you’re looking at the computer screen, a message could pop up.
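The decision described here is simple enough to sketch as a small rule. The function and its two inputs are hypothetical, and the order of the checks is an assumption: the article does not say what should happen when someone is on the phone while also looking at the screen, so this sketch lets the phone call take priority.

```python
def choose_alert(looking_at_screen: bool, on_phone: bool) -> str:
    """Pick a way to announce an important e-mail (illustrative rule only)."""
    if on_phone:
        # Assumption: a silent signal wins whenever the person is on a call.
        return "flashing light"
    if looking_at_screen:
        return "pop-up message"
    # Not on the phone and looking away: make a sound.
    return "beep"


print(choose_alert(looking_at_screen=False, on_phone=False))
print(choose_alert(looking_at_screen=True, on_phone=False))
print(choose_alert(looking_at_screen=False, on_phone=True))
```

The hard part is not the rule itself but knowing the value of `looking_at_screen`, which is exactly what gaze tracking would supply.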

“The importance of this work is that it provides a way of letting a computer know more about what it is you are seeing,” Feiner says. The research is leading toward machines that interact with us more like the way people interact with each other.
