A new audio system confuses smart devices that try to eavesdrop

Its algorithm messes with automatic speech-recognition systems to protect privacy

Secrets can fall prey to eavesdropping smart devices, not just nosy siblings or classmates. A new audio system could help better protect privacy.

Capuski/E+/Getty Images Plus

You may know them as Siri or Alexa. Dubbed personal assistants, these smart devices are attentive listeners. Say just a few words, and they’ll play a favorite song or lead the way to the nearest gas station. But all that listening poses a privacy risk. To help people protect themselves against eavesdropping devices, a new system plays soft, calculated sounds. This masks conversations to confuse the devices.

The smart devices use automated speech-recognition — or ASR — to translate sound waves into text, explains Mia Chiquier. She studies computer science at Columbia University in New York City. The new program fools the ASR by playing sound waves that vary with your speech. Those added waves jumble a sound signal to make it hard for the ASR to pick out the sounds of your speech. It “completely confuses this transcribing system,” Chiquier says.

She and her colleagues describe their new system as “voice camouflage.”

The volume of the masking sounds is not what’s key. In fact, those sounds are quiet. Chiquier likens them to the sound of a small air conditioner in the background. The trick to making them effective, she says, is having these so-called “attack” sound waves fit in with what someone says. To work, the system predicts the sounds that someone will say a short time in the future. Then it quietly broadcasts sounds chosen to confuse the smart speaker’s interpretation of those words.

Chiquier described it on April 25 at the virtual International Conference for Learning Representations.

Getting to know you

Step one in creating great voice camo: Get to know the speaker.

If you text a lot, your smartphone will start to anticipate what the next few letters or word in a message will be. It also gets used to what types of messages you send and the words you use. The new algorithm works in much the same way.

“Our system listens to the last two seconds of your speech,” explains Chiquier. “Based on that speech, it anticipates the sounds you might make in the future.” And not just sometime in the future, but half a second later. That prediction is based on the characteristics of your voice and your language patterns. These data help the algorithm learn and calculate what the team calls a predictive attack.

That attack amounts to the sound that the system plays alongside the speaker’s words. And it keeps changing with each sound someone speaks. When the attack plays along with the words predicted by the algorithm, the combined sound waves turn into an acoustic mishmash that confuses any ASR system within earshot.

The predictive attacks also are hard for an ASR system to outsmart, says Chiquier. For instance, if someone tried to disrupt an ASR by playing a single sound in the background, the device could subtract that noise from the speech sounds. That’s true even if the masking sound periodically changed over time.

The new system instead generates sound waves based on what a speaker has just said. So its attack sounds are constantly changing — and in an unpredictable way. According to Chiquier, that makes it “very difficult for [an ASR device] to defend against.”

Attacks in action

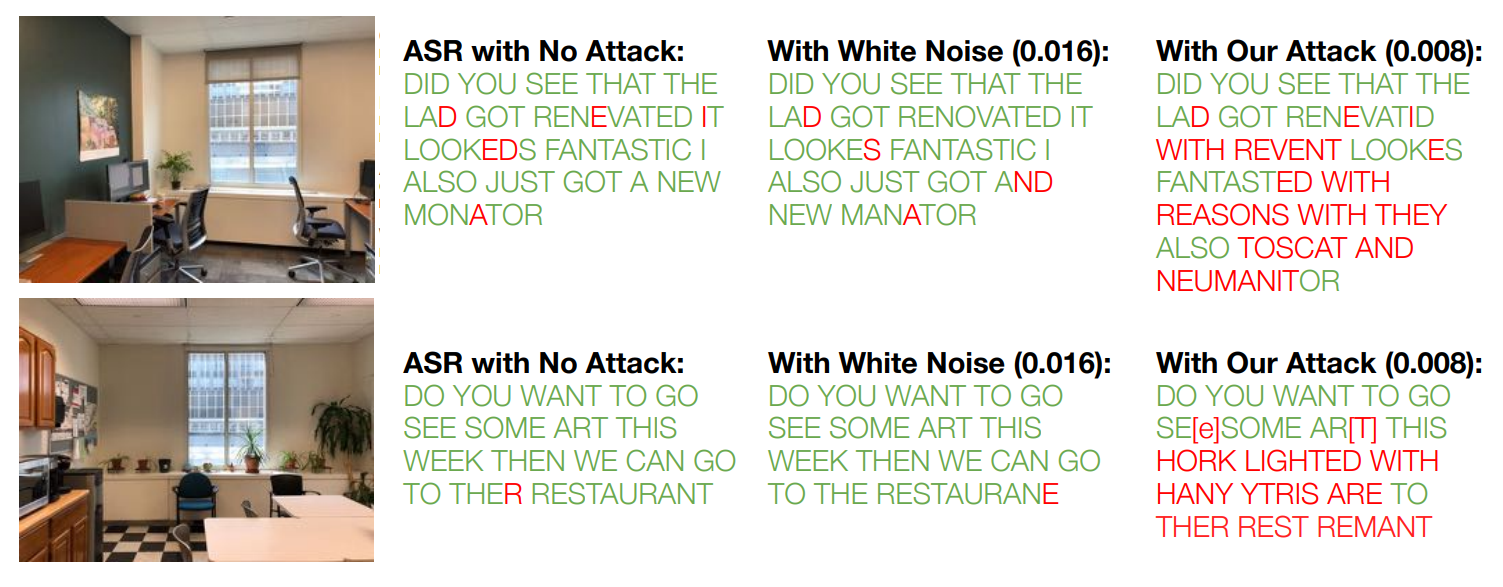

To test their algorithm, the researchers simulated a real-life situation. They played a recording of someone speaking English in a room with an average level of background noise. An ASR device listened in and transcribed what it heard. The team then repeated this test after they added white noise to the background. Finally, the team did this with their voice-masking system on.

The voice-camouflage algorithm kept ASR from correctly hearing words 80 percent of the time. Common words such as “the” and “our” were the hardest to mask. But those words don’t carry a lot of information, the researchers add. Their system was much more effective than white noise. It even performed well against ASR systems designed to subtract background noise.

The algorithm could someday be embedded into an app for use in the real world, Chiquier says. To ensure that an ASR system couldn’t reliably listen in, “you would just open the app,” she says. “That’s about it.” The system could be added to any device that emits sound.

That’s getting a bit ahead of things, though. Next comes more testing.

Sample results

The scientists tested their system in different rooms to mimic real environments. As their results show, the ASR almost always transcribed speech correctly when there was no attack. It was slightly confused by white noise, and much more confused by the new attack system. Here, sounds that were spoken and correctly transcribed by ASR appear green. Sounds that were not spoken and mistakenly transcribed by the ASR appear red. The white noise was played twice as loud as the new attack sounds.

This is “good work” says Bhiksha Raj. He’s an electrical and computer engineer at Carnegie Mellon University in Pittsburgh, Pa. He wasn’t involved in this research. But he, too, studies how people can use technology to protect their speech and voice privacy.

Smart devices currently control how a user’s voice and conversations are protected, Raj says. But he thinks control instead should be left to who’s speaking.

“There are so many aspects to voice,” Raj explains. Words are one aspect. But a voice may also contain other personal information, such as someone’s accent, gender, health, emotional state or physical size. Companies could potentially exploit those features by targeting users with different content, ads or pricing. They could even sell voice information to others, he says.

When it comes to voice, “it’s a challenge to find out how exactly we can obscure it,” Raj says. “But we need to have some control over at least parts of it.”